Fault Tolerance of Data Pipelines

In the context of data pipelines, fault tolerance refers to how the pipeline behaves when an executable node fails. Depending on your settings, the pipeline either terminates when a node fails or continues to run the remaining nodes, skipping the failed node.

What are executable nodes in Data Pipeline Studio?

In Data Pipeline Studio, a job is created for each executable node of a pipeline. These jobs cover tasks such as data integration, data transformation, and data quality. As a result, a single data pipeline can contain multiple jobs, depending on the stages it includes. The more complex the pipeline (many stages and multiple jobs), the greater the need for fault tolerance. With an appropriate fault tolerance setting in place, the failure of one node does not cause the entire pipeline to fail.

Settings for Fault Tolerance

When configuring a job for a data pipeline, you can choose a fault tolerance option that determines how failures are handled. These options control the status of the failed node, any subsequent nodes, and the overall pipeline. Below are the available options for fault tolerance configuration:

| Option | Description | Example |

|---|---|---|

| Default |

If a node fails, subsequent nodes are placed in a pending state, and the overall pipeline shows a failed status. |

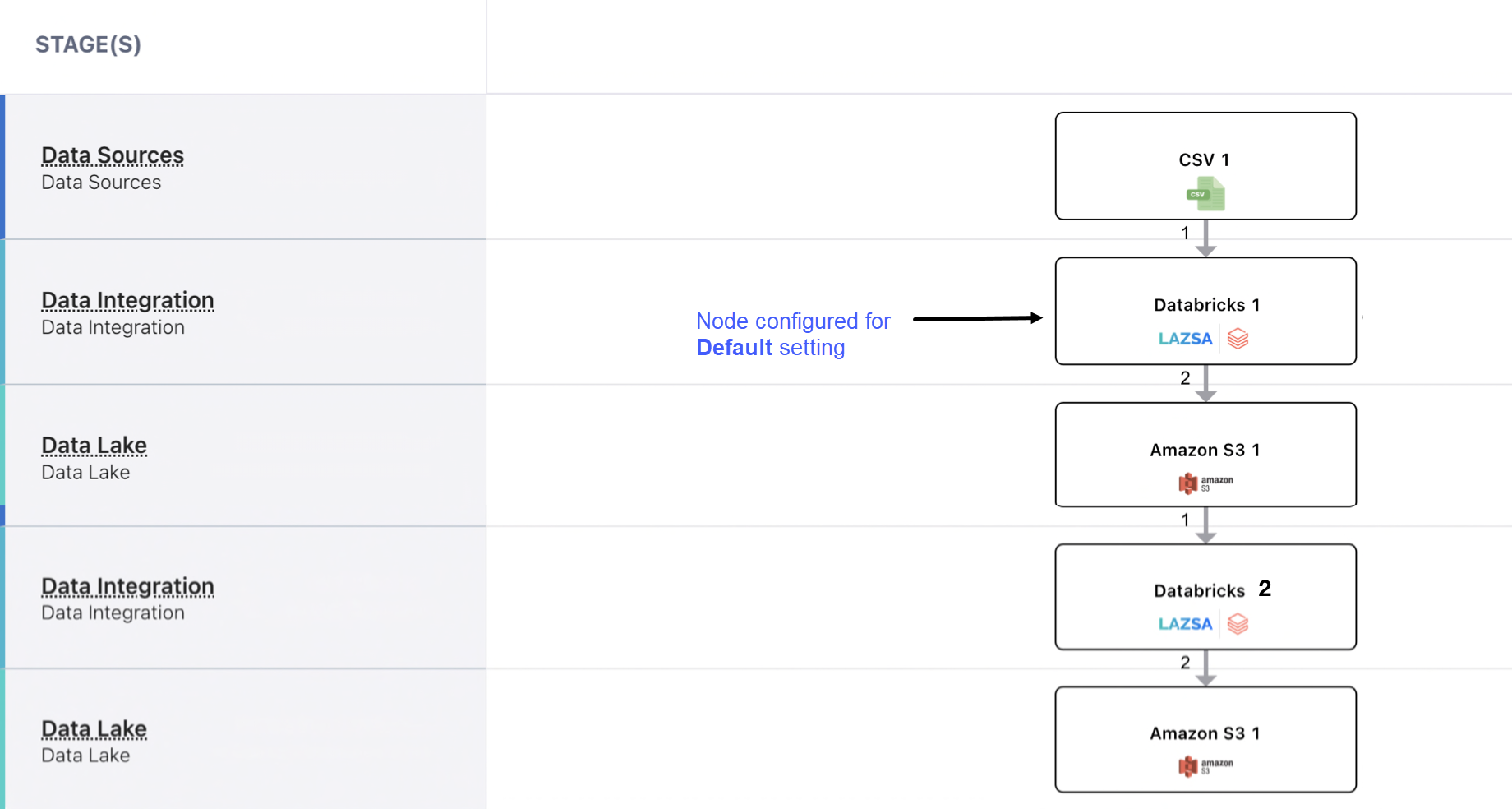

Default setting.

In the above pipeline if Databricks 1 fails, Databricks 2 goes into pending state and the pipeline is marked as failed.

|

| Skip on Failure |

If a node fails, subsequent nodes are skipped. However, nodes on other branches of the pipeline will still execute. |

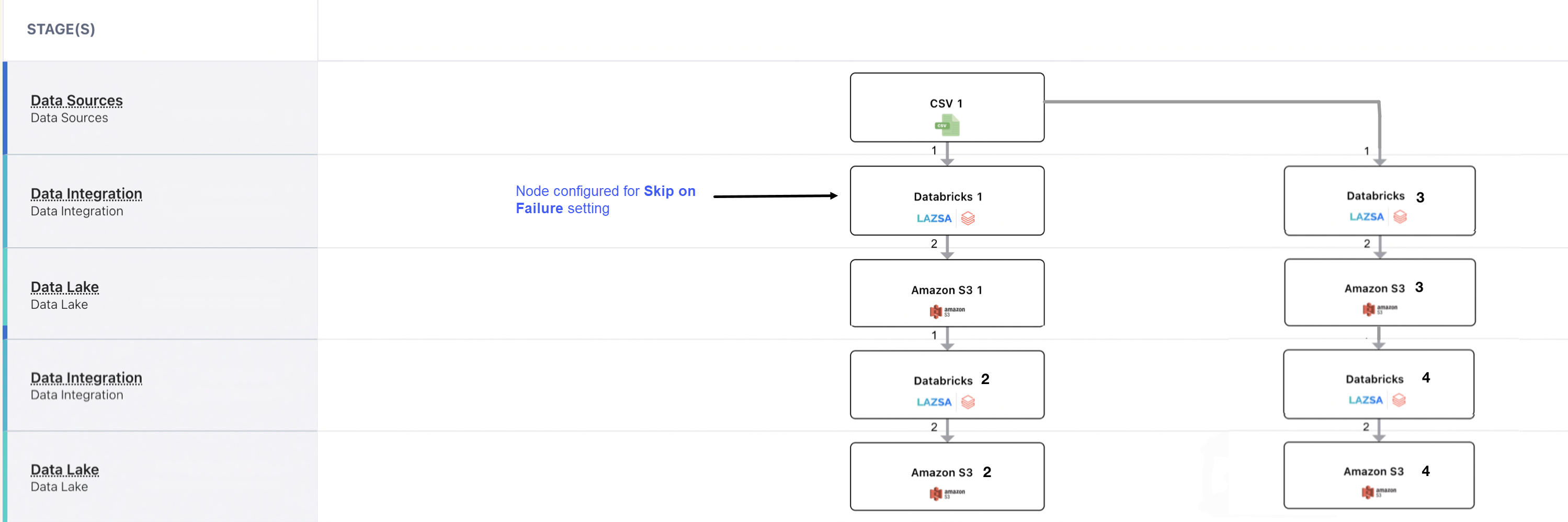

Skip on Failure setting

In the above pipeline if Databricks 1 fails, then Databricks 2 is skipped for execution. However, Databricks 3 and Databricks 4 which are on a separate branch, are still executed.

|

| Proceed on Failure |

If a node fails, subsequent nodes will continue execution, regardless of the failure. This allows the pipeline to proceed, either successfully or with some failures. |

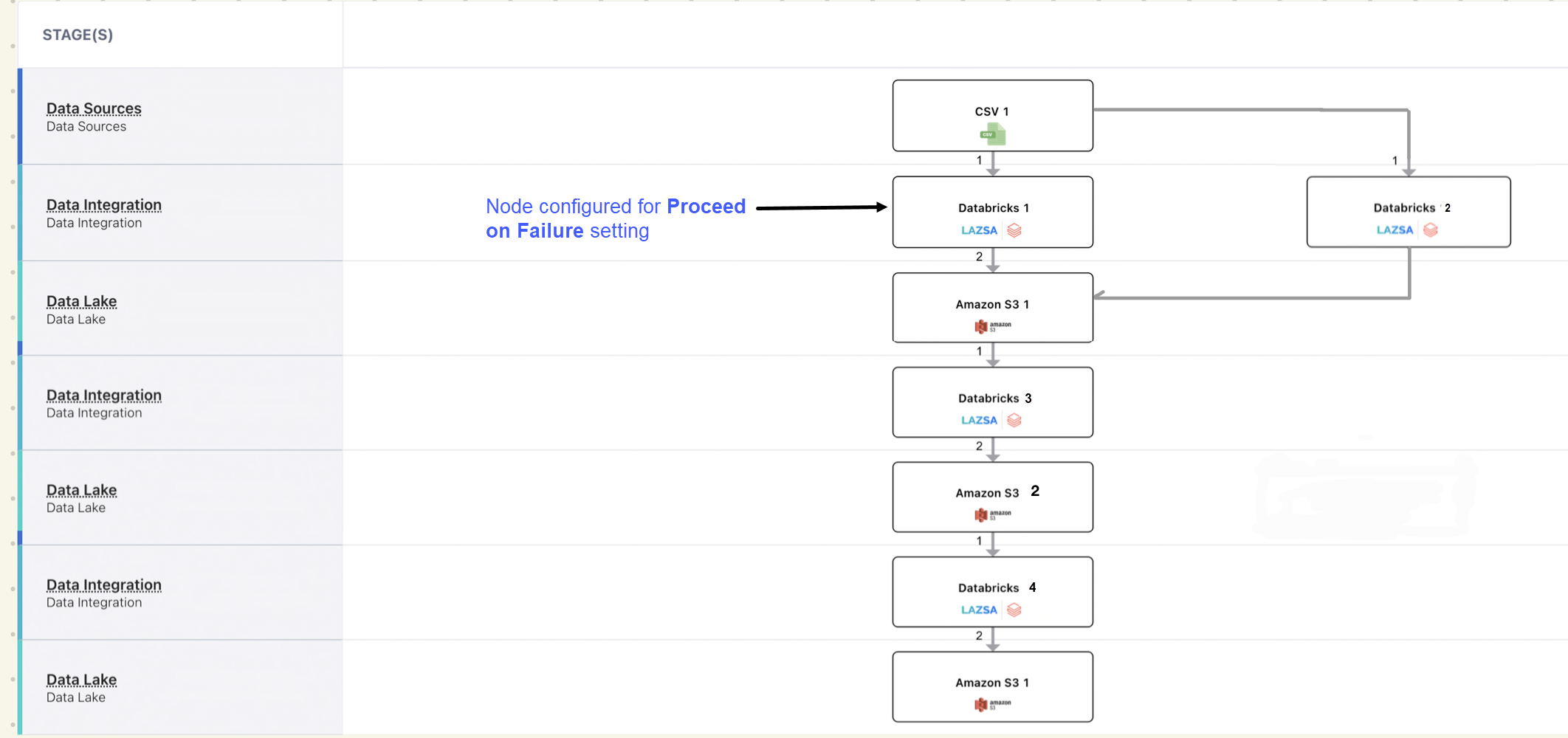

Proceed on Failure setting

Here even if Databricks 1 fails, the remaining nodes (Databricks 2, Databricks 3, and Databricks 4) are executed allowing the pipeline to continue. |

As outlined in the table above, the fault tolerance option you choose defines the behaviour of the failed node, the subsequent nodes, and the overall pipeline. Setting an appropriate fault tolerance option for executable nodes means that even if a specific node fails, the remaining nodes of the pipeline can still execute, which helps maintain business continuity and minimizes the impact of failures.
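To make the difference between the three options concrete, the following Python sketch simulates node statuses for one pipeline run. It is an illustration only and not Data Pipeline Studio code: the names `Policy` and `simulate`, the status strings, and the pipeline shape (Databricks 1 feeding Databricks 2, and Databricks 3 feeding Databricks 4 on a separate branch) are assumptions made for this example. The sketch also assumes a single option applies to every node and that, under Default, nodes on an unaffected branch still run, which the table does not state. The overall pipeline status is left out because the table specifies it only for Default.

```python
from enum import Enum


class Policy(Enum):
    """Hypothetical names for the three fault tolerance options."""
    DEFAULT = "default"
    SKIP_ON_FAILURE = "skip_on_failure"
    PROCEED_ON_FAILURE = "proceed_on_failure"


def simulate(edges, order, failing, policy):
    """Return a {node: status} dict for one simulated run.

    edges:   {node: [direct downstream nodes]}
    order:   nodes listed in execution (topological) order
    failing: set of nodes that fail if they are executed
    policy:  one Policy applied to every node (an assumption of this sketch)
    """
    # Invert the edge map so each node can look up its direct upstream nodes.
    parents = {node: [] for node in order}
    for node, children in edges.items():
        for child in children:
            parents[child].append(node)

    status = {}
    for node in order:
        upstream = [status[p] for p in parents[node]]
        # A node is affected if anything directly upstream did not succeed.
        blocked = any(s in ("failed", "pending", "skipped") for s in upstream)
        if blocked and policy is Policy.DEFAULT:
            status[node] = "pending"
        elif blocked and policy is Policy.SKIP_ON_FAILURE:
            status[node] = "skipped"
        else:
            # Unaffected nodes, and all nodes under Proceed on Failure, execute.
            status[node] = "failed" if node in failing else "succeeded"
    return status


# Assumed pipeline shape: two independent branches.
edges = {"Databricks 1": ["Databricks 2"], "Databricks 3": ["Databricks 4"]}
order = ["Databricks 1", "Databricks 2", "Databricks 3", "Databricks 4"]

for policy in Policy:
    print(policy.name, simulate(edges, order, {"Databricks 1"}, policy))
```

Running the loop with Databricks 1 as the failing node shows Databricks 2 ending up pending, skipped, or executed depending on the option, while the separate branch runs in all three cases under the assumptions stated above.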

What's next? Create a Data Pipeline