Managing Deployment in a Kubernetes Cluster

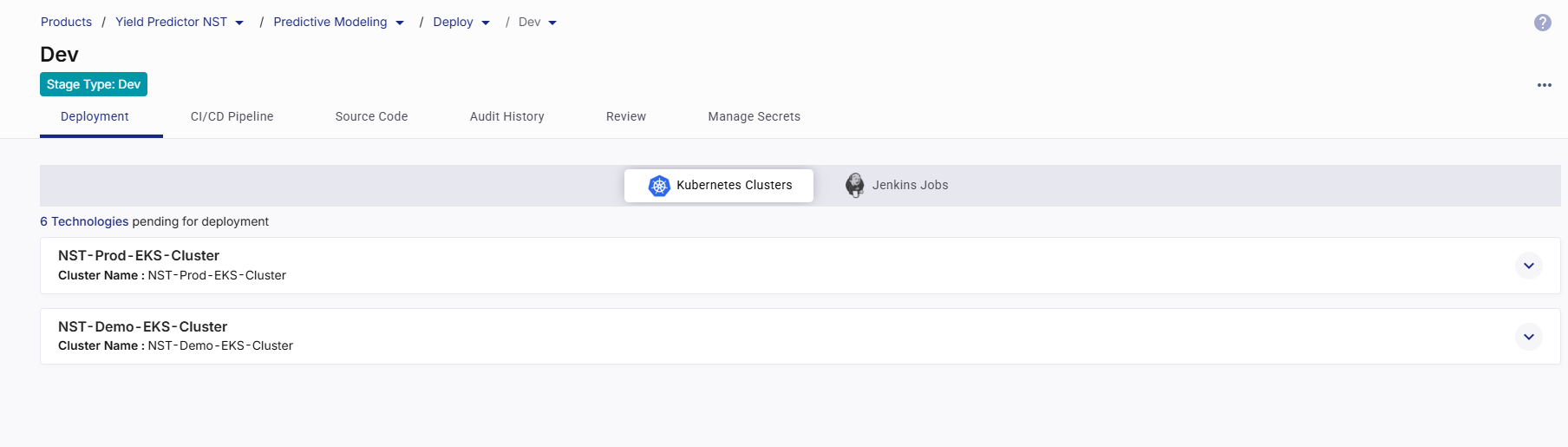

When configuring the deployment details in a deployment stage, if you select the Kubernetes deployment mode, the Kubernetes Clusters section is active on the Deployment tab within that stage. The Kubernetes clusters that you select during stage configuration are listed in the Kubernetes Clusters section.

To manage your deployments in your desired Kubernetes cluster from this section, do the following:

Configure Ingress Controller

To enable external access to services deployed in your Kubernetes cluster, you must configure an ingress controller for the cluster. Before you start adding technologies for deployment, you must ensure that details of your ingress controller are configured in the Calibo Accelerate platform.

Generally, your administrator configures a global ingress controller for all the deployments within a specific Kubernetes cluster. This saves you the time and effort of configuring an ingress controller each time you deploy technologies in the cluster at the feature level.

However, if your administrator hasn't configured a global ingress controller, you must configure one for your Kubernetes cluster.

In the cluster tile, click Configure and then, in the Configure Ingress Controller side drawer, do the following:

- In the Controller Type dropdown, select the ingress controller type you want to configure. Available options include:

  - application-gateway (Azure-specific)

    Azure Application Gateway Ingress Controller (AGIC) allows Application Gateway to expose Kubernetes services over HTTP/S. It integrates directly with Azure networking and provides advanced features such as SSL termination, path-based routing, and Web Application Firewall (WAF) capabilities.

    - Click Configuration Instructions to open the official Azure documentation for AGIC setup.

    - Typically used with AKS clusters.

  - nginx

    NGINX Ingress Controller is a widely used open-source option to manage external access to services in Kubernetes clusters. It supports advanced traffic management features such as rate limiting, SSL termination, URL rewrites, and custom routing rules.

    - Click Configuration Instructions to view the installation steps for NGINX ingress.

    - Compatible with both EKS and AKS clusters.

  - alb (AWS-specific)

    AWS ALB Ingress Controller (also known as AWS Load Balancer Controller) allows the use of AWS Application Load Balancers to route traffic to Kubernetes workloads. It supports dynamic provisioning of ALBs, path-based routing, SSL termination, and integration with AWS security features.

    - Click Configuration Instructions to open the AWS documentation for setting up the ALB ingress controller.

    - Specifically designed for EKS clusters.

- Ingress Class

  Select an Ingress Class from the list. This class identifies the ingress controller responsible for managing ingress resources in the cluster. Ingress classes let you define routing rules, host/path configurations, and traffic control for services deployed in Kubernetes.

- IP / DNS Address Configuration

  For the Application Gateway and NGINX controller types, in the IP / DNS Address section, select how you want to provide the address of the ingress controller:

  - Ingress Hostname: Provide the DNS hostname (for example, app.acme.com) that resolves to your ingress controller. The hostname must start and end with a lowercase letter or a number, and may include lowercase letters, numbers, "-", and ".".

    Important: For the deployed technology’s live URL to work correctly, ensure the DNS hostname is properly configured in your DNS provider’s settings with an appropriate A or CNAME record pointing to your ingress controller.

  - Ingress Controller Address: Provide the external IP address or load balancer IP exposed by the ingress controller.
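The character rule given for the Ingress Hostname field can be checked before you enter a value. The following is a minimal sketch, assuming the rule is exactly as stated above; the function name is hypothetical, and the validation that the platform actually performs may differ.

```python
import re

# Lowercase letters, digits, "-" and ".", starting and ending with a
# lowercase letter or digit (the rule as stated for Ingress Hostname).
HOSTNAME_RULE = re.compile(r"[a-z0-9](?:[a-z0-9.-]*[a-z0-9])?")


def is_valid_ingress_hostname(hostname: str) -> bool:
    """Return True if the hostname satisfies the stated character rule."""
    return HOSTNAME_RULE.fullmatch(hostname) is not None


print(is_valid_ingress_hostname("app.acme.com"))   # True
print(is_valid_ingress_hostname("App.acme.com"))   # False: uppercase letter
print(is_valid_ingress_hostname("-app.acme.com"))  # False: starts with "-"
```

The check covers only the allowed characters. It does not confirm that the hostname resolves to the ingress controller, which still depends on the A or CNAME record in your DNS provider.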

If you select alb as the controller type, provide the following:

- Ingress Group Name (Optional): Specify a group name to associate multiple ingress resources with the same ALB. All ingress resources with this group name share a single ALB.

- Ingress Hostname (Optional): Provide the hostname that routes to the ALB. Ensure that the corresponding DNS configuration is in place for successful routing.
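To illustrate how a group name ties several ingress resources to one ALB, the sketch below builds Ingress manifests as Python dictionaries. It assumes the AWS Load Balancer Controller annotation `alb.ingress.kubernetes.io/group.name` and an ingress class named `alb`; the function, service names, and hostnames are hypothetical, and the manifests that Calibo Accelerate generates may look different.

```python
import json


def build_alb_ingress(name, service_name, service_port, group_name=None, hostname=None):
    """Return an Ingress manifest (as a dict) for the alb ingress class."""
    annotations = {}
    if group_name:
        # Ingresses that carry the same group name are served by one ALB.
        annotations["alb.ingress.kubernetes.io/group.name"] = group_name

    rule = {
        "http": {
            "paths": [
                {
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {
                        "service": {"name": service_name, "port": {"number": service_port}}
                    },
                }
            ]
        }
    }
    if hostname:
        # The hostname is optional, as in the side drawer.
        rule["host"] = hostname

    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name, "annotations": annotations},
        "spec": {"ingressClassName": "alb", "rules": [rule]},
    }


# Two hypothetical ingresses that share the group "shared-alb".
orders = build_alb_ingress("orders", "orders-svc", 8080, "shared-alb", "orders.acme.com")
billing = build_alb_ingress("billing", "billing-svc", 8080, "shared-alb", "billing.acme.com")
print(json.dumps(orders, indent=2))
```

Because both manifests carry the same group annotation, the controller would route both hostnames through a single ALB instead of provisioning one per ingress.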

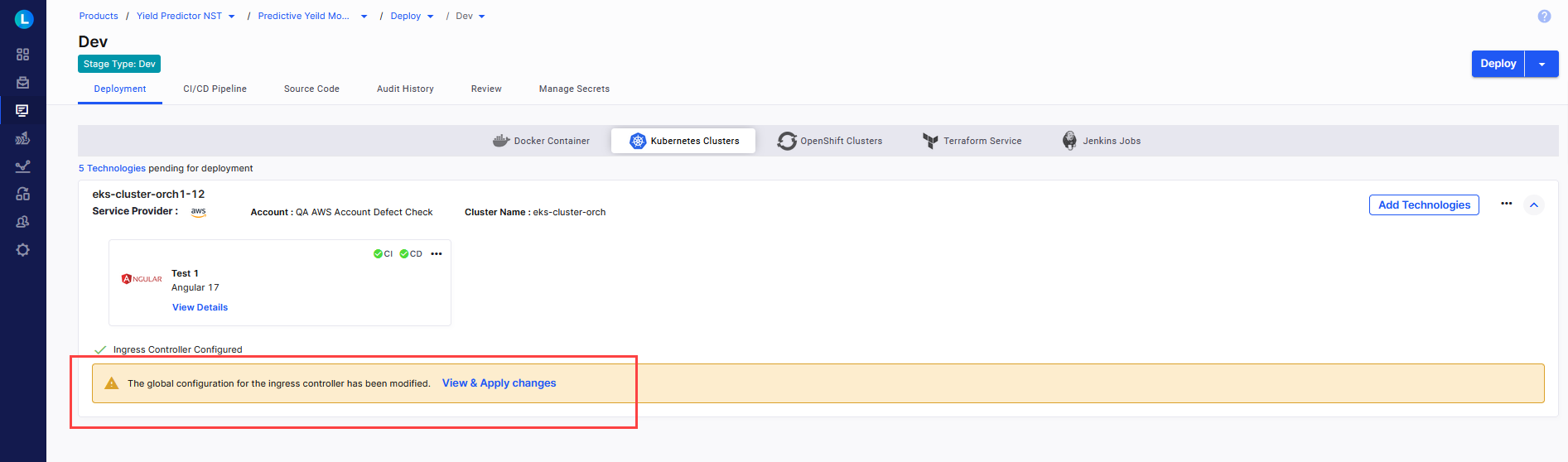

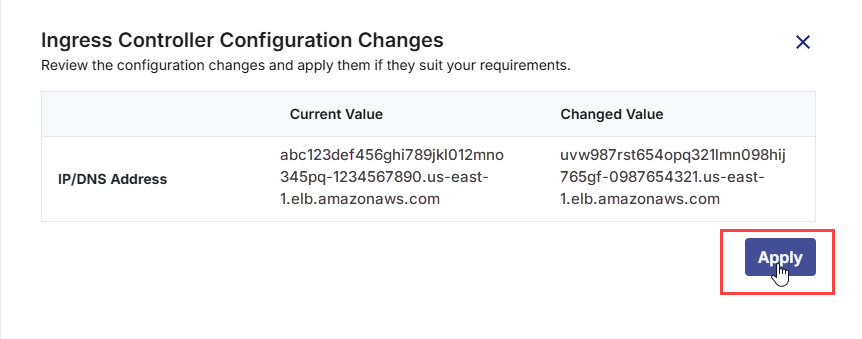

View and Apply Global Ingress Controller Configuration Changes

After your administrator edits the global ingress controller configuration, a message about the configuration changes is displayed at the stage level where the related Kubernetes cluster is used for deployments.

Click View & Apply Changes.

In the side drawer, review the changes and click Apply.

View or Edit Ingress Controller Configuration

In the cluster tile, click the ellipsis (...) on the right, beside the accordion, and then click View Ingress Controller Configuration.

Review the ingress controller configuration details.

If you are viewing the configured details of the global ingress controller and if your administrator has allowed modifications to the ingress controller configuration details at the stage level, you can edit these details.

You can always edit the details of the ingress controller that you have configured at the stage level.

Note:

Editing ingress controller configuration may affect your existing technology deployments. You may need to redeploy the technologies for products that use the ingress controller configuration you edit.

To edit ingress controller details, do the following:

- In the Configure Ingress Controller side drawer, click the edit icon.

- Select a different ingress controller type and class, or modify the ingress controller address or hostname, as required.

For detailed configuration steps, see Configure Ingress Controller.

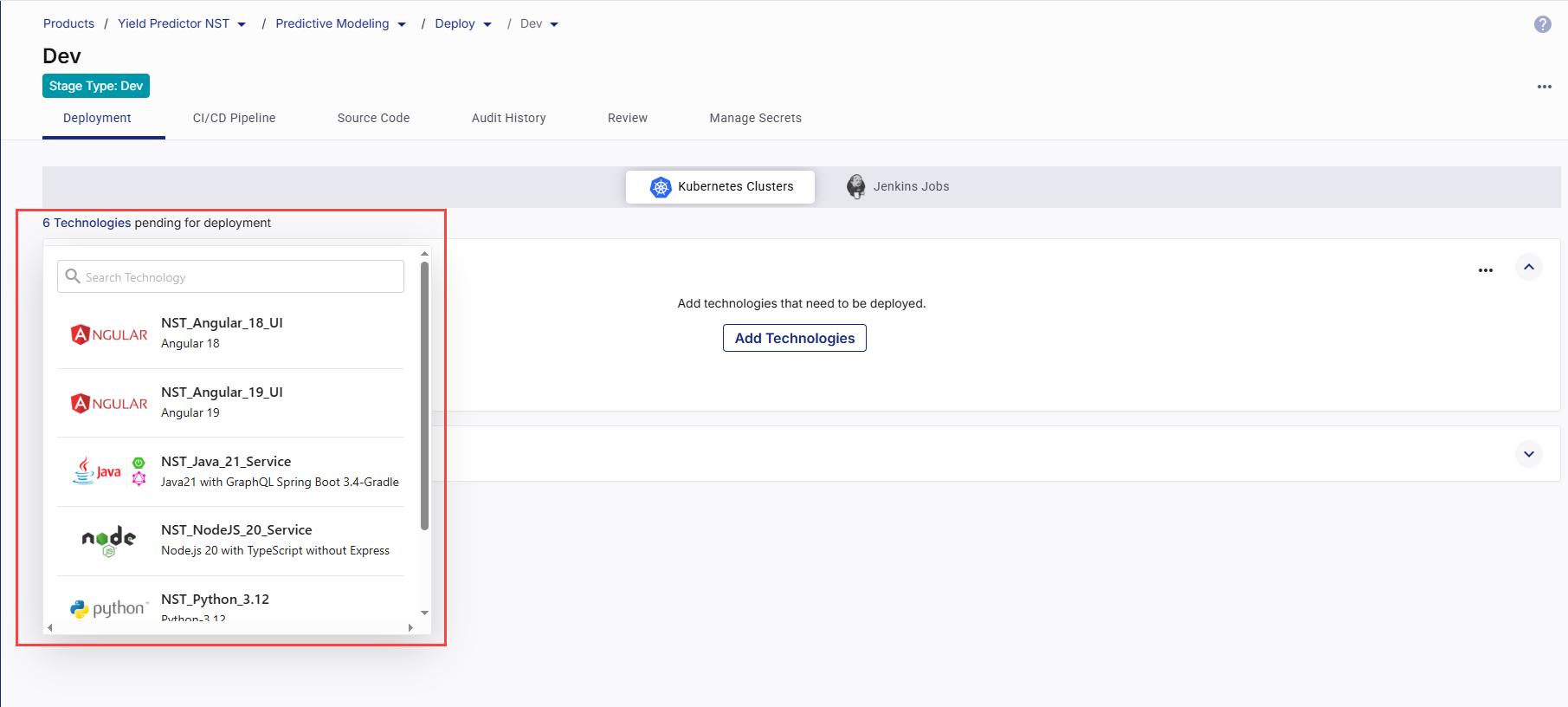

View Technologies Pending for Deployment

In the Kubernetes Clusters section, in the upper left corner, the list of ready-to-deploy technologies that you have added in the Develop phase is displayed. You can search for your desired technology in the list by the technology name or its title that you give while adding it in the Develop phase.

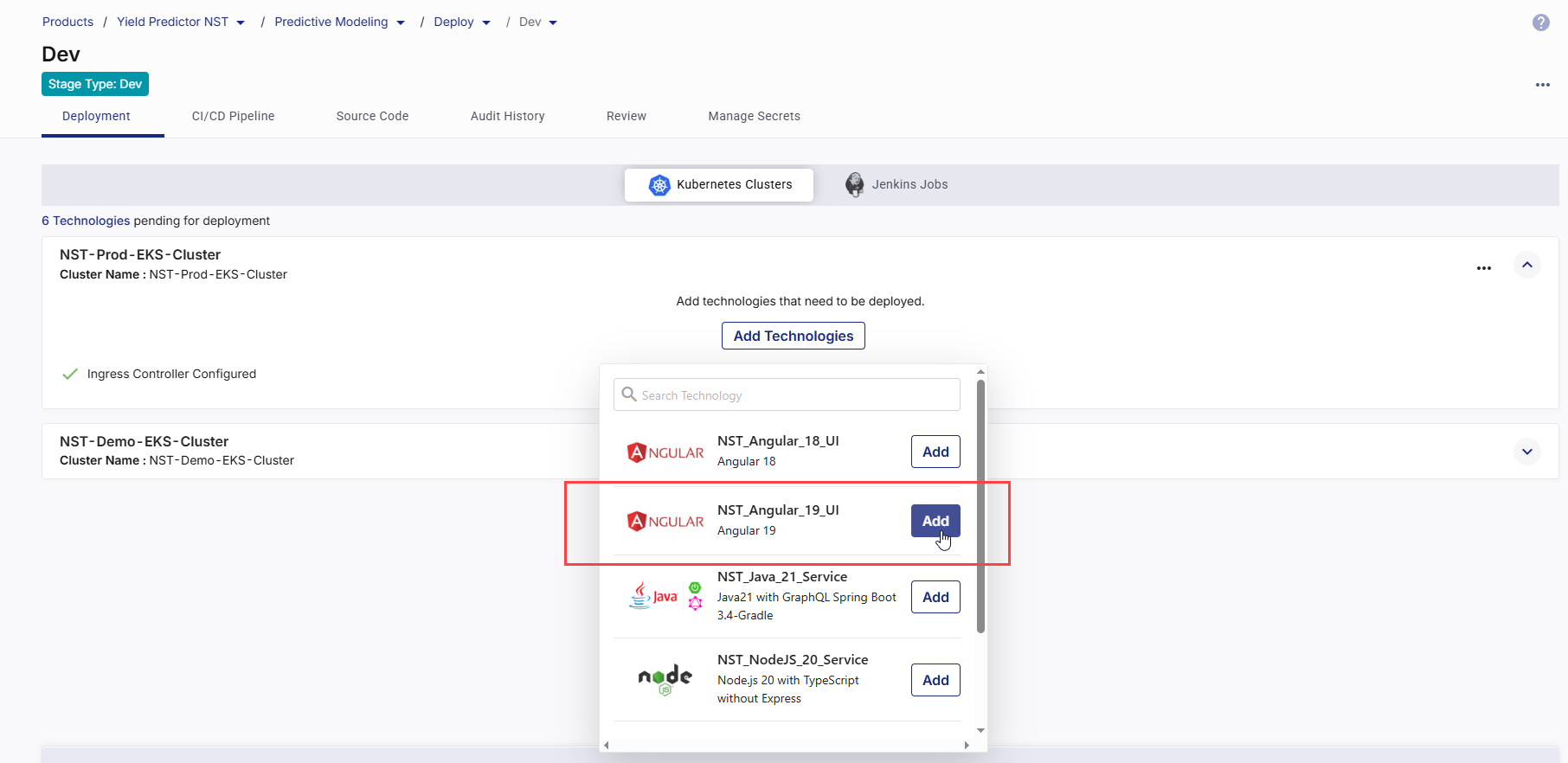

Add Technologies to Be Deployed

In the Kubernetes Cluster tile where you want to deploy technologies, click Add Technologies. Then click Add next to each technology that you want to deploy.

-

Configure Deployment Specifications

In the side drawer, configure the following details required for the deployment. These settings control how the application is built, deployed, exposed, and tested in the target Kubernetes cluster.

Depending on the CI/CD tool selected in your deployment stage configuration, some configuration options and the overall flow will vary. The following sections describe the configuration process separately for Jenkins and GitHub Actions.

If Jenkins Is Your CI/CD Tool

Follow this section if your deployment stage is configured to use Jenkins for build and deployment automation. This process uses Jenkins jobs and pipelines, and the configuration fields available in the side drawer are tailored for Jenkins integration.

1. Specify Context Path

Specify the context path for your application. The context path allows you to access your application through a specific URL segment. For example, if you set the context path to /app, users will access the application at https://your-domain/app. This helps in routing traffic correctly within your application.
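The relationship between the context path and the resulting URL can be sketched as follows. This is an illustration only: the function name is hypothetical, and it assumes the live URL is simply the domain followed by the context path over HTTPS.

```python
def live_url(domain: str, context_path: str) -> str:
    """Join a domain and a context path into the URL users would open."""
    # Normalize the path so that "app", "/app" and "/app/" behave the same.
    path = "/" + context_path.strip("/")
    return f"https://{domain}{path}"


print(live_url("your-domain", "/app"))  # https://your-domain/app
print(live_url("your-domain", "app/"))  # https://your-domain/app
```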

2. Configure Continuous Integration (CI) Settings

Continuous Integration automates the process of building, testing, and preparing your application whenever there is a code change or based on a defined schedule.

- Source Code Branch

  Select the specific branch from your source code repository that contains the code you want to deploy. This is the branch your CI pipeline will monitor for changes.

- Trigger Types

  The CI trigger determines when the pipeline should start:

  - Code Change – The pipeline runs automatically whenever changes are committed to the selected branch. Ideal for active development workflows.

  - Manual – The pipeline is triggered only when you explicitly start it from the platform. Suitable for controlled build scenarios.

  - Schedule – You can define one or more time-based schedules to run the CI pipeline automatically at specific times. Useful for periodic deployments and testing.

    In each schedule, you can specify the following:

    - Time Zone: Select the desired time zone for the CI trigger schedule.

    - Frequency: Select the Every Day or Every Week option as required.

    - Time: Define when to trigger the pipeline (in hours and minutes). You can define multiple schedules as well.

  Best Practice:

  - Use Code Change in development to get instant feedback on commits.

  - Use Manual in production to avoid unintentional deployments.

  - Use Schedule for batch updates or automated maintenance windows.

3. Configure Continuous Deployment (CD) Settings


Continuous Deployment automates pushing your application to the Kubernetes cluster after a successful build.

- Custom Helm Chart (Optional)

  Enable this toggle if you want to deploy using your own Helm chart instead of the default chart generated by Calibo Accelerate. This option allows you to provide custom Kubernetes manifest configurations, giving you more control over workloads, service definitions, and resource specifications.

  When you enable the toggle, you must configure the following fields:

  - Namespace

    Select the Kubernetes namespace where your Helm chart should be deployed. Namespaces isolate resources for different environments or projects.

  - Helm Chart Repository

    Select the repository containing your Helm chart. This can be a Git repository or a Helm chart repository configured in Calibo Accelerate. The repository must be pre-configured in the platform before you can select it, which ensures that the chart source is trusted and accessible.

  - Branch

    Specify the branch of the Helm chart repository to use. This is useful for maintaining different chart versions (for example, main for stable releases, develop for ongoing changes).

  - Chart Path

    Provide the path within the repository to the main Helm chart directory. This is typically where the Chart.yaml file is located. Example: charts/frontend-service

  - Values Path

    Specify the path to the values.yaml file (or a custom values file) within the repository. The values file defines configurable parameters for the chart, such as replica counts, image versions, environment variables, and resource limits. Example: charts/frontend-service/values-prod.yaml
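To see how the Namespace, Chart Path, and Values Path fields relate to each other, the sketch below assembles a comparable Helm command line. It is an assumption that the platform runs something similar; the release name and namespace are hypothetical, and only the standard `helm upgrade --install`, `--namespace`, and `--values` options are used.

```python
import shlex


def helm_upgrade_command(release, namespace, chart_path, values_path):
    """Return the argument list for installing or upgrading a chart."""
    return [
        "helm", "upgrade", "--install", release, chart_path,
        "--namespace", namespace,
        "--values", values_path,
    ]


command = helm_upgrade_command(
    release="frontend-service",          # hypothetical release name
    namespace="prod",                    # hypothetical namespace
    chart_path="charts/frontend-service",
    values_path="charts/frontend-service/values-prod.yaml",
)
print(shlex.join(command))
```

The chart path points at the directory that holds Chart.yaml, while the values path points at a single file, which is why the two example paths differ in depth.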

- Deployment Configuration

  - Namespace

    The namespaces available in the selected Kubernetes cluster are displayed in the list. Select the desired namespace where the workload should be deployed. Selecting the appropriate namespace ensures that your technology is deployed in the correct logical environment and helps you organize and manage resources effectively.

  - Replicas

    Define the number of pod instances of your application to run. Using replicas helps in scaling your application for load balancing and fault tolerance. More replicas mean better handling of user requests and higher availability.

  - Environment Variables (Optional)

    Click Add More to define one or more environment variables in key-value pairs. These will be injected into the container at runtime and can be used for configuration, such as database URLs, API keys, or feature flags.

- Networking

  - Port Number – Enter the container port your application listens on (for example, 4200 for Angular development servers).

  - Service Type – Determines how your service will be exposed:

    - Cluster IP (default)

      Exposes the service on a private, internal IP address that is accessible only within the Kubernetes cluster. This is the most common type for internal microservices, backend APIs, and workloads that do not require direct access from outside the cluster.

      When to Use

      - Backend services communicating with other pods via internal networking.

      - Databases, caches, or APIs that should not be directly exposed to end users.

    - Node Port

      Allocates a static port (range: 30000–32767) on each Kubernetes worker node. The service can then be accessed externally using <NodeIP>:<NodePort>. NodePort services are a layer on top of ClusterIP: they still get an internal ClusterIP address but also expose themselves externally.

      When to Use

      - For short-term external testing without setting up ingress or a cloud load balancer.

      - When you want to manually route traffic via node IPs.

    - Load Balancer

      Creates an external load balancer using your cloud provider’s native load balancing service (for example, AWS ELB/ALB, Azure Load Balancer). Assigns a public IP address or DNS name for global accessibility. Traffic to the load balancer is automatically routed to the service’s pods.

      When to Use

      - For production-grade, internet-facing services requiring stable, scalable, and fault-tolerant access.

      - For APIs, web applications, or services that must be directly accessible to end users over the internet.
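The three service types correspond to the `type` field of a Kubernetes Service. The sketch below builds such a manifest as a Python dictionary and enforces the NodePort range mentioned above. The function name, the `app` selector label, and the choice to accept an explicit node port only for the NodePort type are assumptions made for illustration.

```python
NODE_PORT_RANGE = range(30000, 32768)  # 30000-32767 inclusive
SERVICE_TYPES = ("ClusterIP", "NodePort", "LoadBalancer")


def build_service(name, port, service_type="ClusterIP", node_port=None):
    """Return a Service manifest (as a dict) for the chosen service type."""
    if service_type not in SERVICE_TYPES:
        raise ValueError(f"unsupported service type: {service_type}")

    port_entry = {"port": port, "targetPort": port}
    if node_port is not None:
        # In this sketch, an explicit node port is accepted only for NodePort.
        if service_type != "NodePort":
            raise ValueError("node_port applies only to the NodePort type")
        if node_port not in NODE_PORT_RANGE:
            raise ValueError("node_port must be between 30000 and 32767")
        port_entry["nodePort"] = node_port

    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": {"app": name},  # hypothetical pod label
            "ports": [port_entry],
        },
    }


print(build_service("frontend", 4200))                     # internal only
print(build_service("frontend", 4200, "NodePort", 30080))  # <NodeIP>:30080
```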

- Ingress Configuration

  When this toggle is enabled, an ingress resource will be created, and a live URL will be generated after deployment. This URL allows access to your application from outside the cluster without directly exposing node ports.

  The Ingress toggle is available for Cluster IP and Node Port service types. It is not available for the Load Balancer service type, as Load Balancer already provisions a public endpoint through the cloud provider.

- Resource Allocation

  In this section, define the minimum and maximum resource allocations for your containers. This ensures that your applications have the necessary resources to function correctly while also preventing them from consuming excessive resources that could impact other applications in the cluster.

  - Requests

    In Requests, you specify the minimum amount of CPU and memory resources that a container requires. The Kubernetes scheduler uses these values to make informed decisions about where to place containers within the cluster. Requests help ensure that containers have the resources they need to start and operate smoothly under typical conditions.

    - Memory (in Megabytes)

      Specify the amount of memory the application is guaranteed. Enter a numeric value greater than or equal to 128. Specifying a memory request ensures that your application has enough memory to run smoothly. This helps the Kubernetes scheduler allocate resources efficiently.

    - CPU (in Millicores)

      Specify the amount of CPU the application is guaranteed. Enter a numeric value greater than or equal to 50. Specifying a CPU request ensures that your application has enough processing power. This helps prevent performance issues by reserving necessary CPU resources.

  - Limits

    In Limits, you define the maximum amount of CPU and memory resources that a container can use. Limits protect the cluster from resource exhaustion by capping the amount of resources any single container can consume. This prevents containers from monopolizing resources, which could negatively impact the performance and stability of other applications in the cluster.

    - Memory (in Megabytes)

      Specify the maximum amount of memory your application can use. Enter a numeric value greater than or equal to 128. Defining a memory limit prevents your application from using more memory than allocated, which could otherwise lead to resource exhaustion and affect other applications running in the cluster.

    - CPU (in Millicores)

      Specify the maximum amount of CPU your application can use. Enter a numeric value greater than or equal to 50. Defining the CPU limit prevents your application from consuming more CPU resources than allocated, ensuring fair resource distribution among all applications in the cluster.


- Trigger Types

The CD pipeline trigger determines when your application will be deployed to the Kubernetes cluster. It controls the execution of the Continuous Deployment process after the build (CI) phase.

Note:

The Run after Continuous Integration and Schedule triggers are effective only after the technology has been deployed manually from Calibo Accelerate. This ensures all Kubernetes resources, namespaces, and cluster configurations are properly set up before automated deployments can run. The Manual trigger does not have this dependency. Manual deployments are initiated directly by you, allowing the platform to verify and create any missing resources at the time of execution. In contrast, automated triggers run unattended and assume that the infrastructure is already provisioned and functional, which is why they require a successful manual deployment as a baseline.

- Run after Continuous Integration

  Automatically starts deployment immediately after the CI pipeline completes successfully. No manual action is required between build completion and deployment.

  When to Use

  - Agile environments where code changes should be deployed as soon as they pass the build and test stages.

  - Lower environments (dev, qa) for continuous delivery and rapid feedback.

  - Automated validation workflows where every successful build should be deployed for verification.

  Example

  A React UI project deployed to a QA namespace as soon as the latest commit passes CI checks.

- Manual

  The deployment runs only when triggered manually from the Calibo Accelerate interface. This gives you complete control over when changes are promoted to the environment.

  When to Use

  - Production environments requiring controlled, approved releases.

  - Cases where deployments must align with business timelines or maintenance windows.

  - Scenarios where manual QA or compliance review is required before release.

  Example

  A Java microservice that is deployed to production only after security and compliance teams approve the release.

- Schedule

  Runs deployment automatically at predefined intervals (for example, daily or weekly). Builds may occur more frequently, but deployments happen only on the set schedule.

  In each schedule, you can specify the following:

  - Time Zone: Select the desired time zone for the CD trigger schedule.

  - Frequency: Select the Every Day or Every Week option as required.

  - Time: Define when to trigger the pipeline (in hours and minutes). You can define multiple schedules as well.

4. Functional Testing


Enable the Functional Testing toggle to run automated test cases immediately after deployment. Configure the following fields:

- Testing Tool – Select from configured testing tools (for example, Selenium).

- Test Case Repository – Repository URL containing test scripts.

- Branch – Branch of the test case repository.

- Command – CLI command to execute the test cases.

If GitHub Actions Is Your CI/CD Tool

Follow this section if your deployment stage is configured to use GitHub Actions for build and deployment automation. This process uses GitHub Actions workflows, and the configuration fields available in the side drawer are tailored for GitHub Actions integration.

1. Specify Context Path

Specify the context path for your application. The context path allows you to access your application through a specific URL segment. For example, if you set the context path to /app, users will access the application at https://your-domain/app. This helps in routing traffic correctly within your application.

2. Add Runner Labels

- What are runners in GitHub Actions?

  In GitHub Actions, a runner is a server that executes the jobs defined in your workflow. Each runner listens for available jobs, runs them when triggered, and reports the results back to GitHub. There are two main types of runners:

  - GitHub-hosted runners – Managed by GitHub, automatically updated, and preconfigured with popular tools and languages.

  - Self-hosted runners – Managed by you, allowing custom hardware, software, and network configurations.

- What are runner labels?

  Runner labels are tags or identifiers assigned to self-hosted runners to categorize them based on their characteristics, capabilities, or intended use. When you specify runner labels in your deployment configuration, the job will be assigned only to runners that match all the specified labels.

- Specify all intended runner labels

  Specify one or more runner labels to ensure that the GitHub Actions workflow is assigned to an appropriate runner.

  The workflow job is assigned to a runner whose label set matches all specified labels. If no matching runner is available, the job remains in a pending state until one becomes available.

  Tip: Maintain a documented list of available runner labels in your project so developers know which labels to use.
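The "matches all specified labels" rule is a subset test: a runner qualifies only if its label set contains every label you specify. The sketch below illustrates that rule; the runner names and labels are hypothetical, and the actual assignment is performed by GitHub, not by this code.

```python
def eligible_runners(required_labels, runners):
    """Return the names of runners whose labels include every required label."""
    required = set(required_labels)
    return [name for name, labels in runners.items() if required <= set(labels)]


# Hypothetical self-hosted runners and their labels.
runners = {
    "runner-a": ["self-hosted", "linux", "x64"],
    "runner-b": ["self-hosted", "windows", "x64"],
}

print(eligible_runners(["self-hosted", "linux"], runners))  # ['runner-a']
print(eligible_runners(["self-hosted", "gpu"], runners))    # []
```

An empty result corresponds to the case where the job stays pending until a matching runner becomes available.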

3. Configure Continuous Integration (CI) Settings


Continuous Integration automates the process of building, testing, and preparing your application whenever there is a code change or based on a defined schedule.

- Source Code Branch

  Select the specific branch from your source code repository that contains the code you want to deploy. The CI pipeline monitors this branch for changes, builds the application, and prepares artifacts for deployment.

- Trigger Types

  The CI trigger defines when your GitHub Actions workflow should run. The Manual trigger type is selected by default and is required for Calibo Accelerate to trigger workflows. To add other trigger types, click +New Trigger.

  - Manual (Default and Mandatory)

    - Always added automatically.

    - Required by Calibo Accelerate to allow you to manually trigger the workflow from the platform if automated triggers are unavailable or fail.

    - Ensures that you can always deploy on demand, even if no code changes occur.

  - After Push

    - Triggers the workflow automatically whenever a commit is pushed to the selected branch.

    - Ideal for continuous delivery pipelines in lower environments.

  - Cron Schedule – You can define one or more time-based schedules to run the workflow automatically at specific times. Useful for nightly builds, weekly refreshes, or maintenance deployments.

    In each schedule, you can specify the following:

    - Time Zone: Select the desired time zone for the CI trigger schedule.

    - Frequency: Select the Every Day or Every Week option as required.

    - Time: Define when to trigger the pipeline (in hours and minutes). You can define multiple schedules as well.
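A schedule made of a frequency and a time can be pictured as a five-field cron expression (minute, hour, day of month, month, day of week). The sketch below shows one possible mapping. How Calibo Accelerate actually translates these fields is not described here, so the function, the weekday argument, and the omission of time zone handling are all assumptions.

```python
# Hypothetical mapping from the schedule fields to a five-field cron
# expression (minute hour day-of-month month day-of-week).
WEEKDAYS = {"SUN": 0, "MON": 1, "TUE": 2, "WED": 3, "THU": 4, "FRI": 5, "SAT": 6}


def schedule_to_cron(frequency: str, hour: int, minute: int, weekday: str = "MON") -> str:
    """Build a cron expression for an Every Day or Every Week schedule."""
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute must be 0-59")
    if frequency == "Every Day":
        return f"{minute} {hour} * * *"
    if frequency == "Every Week":
        # The weekday argument is an assumption; the source does not say
        # how the day of the week is chosen.
        return f"{minute} {hour} * * {WEEKDAYS[weekday]}"
    raise ValueError(f"unsupported frequency: {frequency}")


print(schedule_to_cron("Every Day", 2, 30))          # 30 2 * * *
print(schedule_to_cron("Every Week", 22, 0, "FRI"))  # 0 22 * * 5
```

Defining multiple schedules would then amount to producing one such expression per schedule.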

4. Configure Continuous Deployment (CD) Settings


Continuous Deployment automates pushing your application to the Kubernetes cluster after a successful build.

- Custom Helm Chart (Optional)

  Enable this toggle if you want to deploy using your own Helm chart instead of the default chart generated by Calibo Accelerate. This option allows you to provide custom Kubernetes manifest configurations, giving you more control over workloads, service definitions, and resource specifications.

  When you enable the toggle, you must configure the following fields:

  - Namespace

    Select the Kubernetes namespace where your Helm chart should be deployed. Namespaces isolate resources for different environments or projects.

  - Helm Chart Repository

    Select the repository containing your Helm chart. This can be a Git repository or a Helm chart repository configured in Calibo Accelerate. The repository must be pre-configured in the platform before you can select it, which ensures that the chart source is trusted and accessible.

  - Branch

    Specify the branch of the Helm chart repository to use. This is useful for maintaining different chart versions (for example, main for stable releases, develop for ongoing changes).

  - Chart Path

    Provide the path within the repository to the main Helm chart directory. This is typically where the Chart.yaml file is located. Example: charts/frontend-service

  - Values Path

    Specify the path to the values.yaml file (or a custom values file) within the repository. The values file defines configurable parameters for the chart, such as replica counts, image versions, environment variables, and resource limits. Example: charts/frontend-service/values-prod.yaml

- Deployment Configuration

  - Namespace

    The namespaces available in the selected Kubernetes cluster are displayed in the list. Select the desired namespace where the workload should be deployed. Selecting the appropriate namespace ensures that your technology is deployed in the correct logical environment and helps you organize and manage resources effectively.

  - Replicas

    Define the number of pod instances of your application to run. Using replicas helps in scaling your application for load balancing and fault tolerance. More replicas mean better handling of user requests and higher availability.

  - Environment Variables (Optional)

    Click Add More to define one or more environment variables in key-value pairs. These will be injected into the container at runtime and can be used for configuration, such as database URLs, API keys, or feature flags.
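The Namespace, Replicas, and Environment Variables fields map naturally onto a Kubernetes Deployment. The sketch below builds such a manifest as a Python dictionary to show where each value would end up. The function name, image reference, `app` label, and variable names are hypothetical, and the manifest that the platform generates may be structured differently.

```python
import json


def build_deployment(name, namespace, image, replicas, env=None):
    """Return a Deployment manifest (as a dict) from the side-drawer values."""
    if replicas < 0:
        raise ValueError("replicas must not be negative")
    container = {
        "name": name,
        "image": image,
        # Each key-value pair becomes one entry in the container's env list.
        "env": [{"name": key, "value": value} for key, value in (env or {}).items()],
    }
    labels = {"app": name}  # hypothetical label linking pods to the Deployment
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


deployment = build_deployment(
    name="frontend",
    namespace="qa",
    image="registry.example.com/frontend:1.0.0",  # hypothetical image
    replicas=3,
    env={"API_URL": "https://api.example.com", "FEATURE_X": "true"},
)
print(json.dumps(deployment, indent=2))
```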

- Networking

  - Port Number – Enter the container port your application listens on (for example, 4200 for Angular development servers).

  - Service Type – Determines how your service will be exposed:

    - Cluster IP (default)

      Exposes the service on a private, internal IP address that is accessible only within the Kubernetes cluster. This is the most common type for internal microservices, backend APIs, and workloads that do not require direct access from outside the cluster.

      When to Use

      - Backend services communicating with other pods via internal networking.

      - Databases, caches, or APIs that should not be directly exposed to end users.

    - Node Port

      Allocates a static port (range: 30000–32767) on each Kubernetes worker node. The service can then be accessed externally using <NodeIP>:<NodePort>. NodePort services are a layer on top of ClusterIP: they still get an internal ClusterIP address but also expose themselves externally.

      When to Use

      - For short-term external testing without setting up ingress or a cloud load balancer.

      - When you want to manually route traffic via node IPs.

    - Load Balancer

      Creates an external load balancer using your cloud provider’s native load balancing service (for example, AWS ELB/ALB, Azure Load Balancer). Assigns a public IP address or DNS name for global accessibility. Traffic to the load balancer is automatically routed to the service’s pods.

      When to Use

      - For production-grade, internet-facing services requiring stable, scalable, and fault-tolerant access.

      - For APIs, web applications, or services that must be directly accessible to end users over the internet.

- Ingress Configuration

  When this toggle is enabled, an ingress resource will be created, and a live URL will be generated after deployment. This URL allows access to your application from outside the cluster without directly exposing node ports.

  The Ingress toggle is available for Cluster IP and Node Port service types. It is not available for the Load Balancer service type, as Load Balancer already provisions a public endpoint through the cloud provider.

- Resource Allocation

  In this section, define the minimum and maximum resource allocations for your containers. This ensures that your applications have the necessary resources to function correctly while also preventing them from consuming excessive resources that could impact other applications in the cluster.

  - Requests

    In Requests, you specify the minimum amount of CPU and memory resources that a container requires. The Kubernetes scheduler uses these values to make informed decisions about where to place containers within the cluster. Requests help ensure that containers have the resources they need to start and operate smoothly under typical conditions.

    - Memory (in Megabytes)

      Specify the amount of memory the application is guaranteed. Enter a numeric value greater than or equal to 128. Specifying a memory request ensures that your application has enough memory to run smoothly. This helps the Kubernetes scheduler allocate resources efficiently.

    - CPU (in Millicores)

      Specify the amount of CPU the application is guaranteed. Enter a numeric value greater than or equal to 50. Specifying a CPU request ensures that your application has enough processing power. This helps prevent performance issues by reserving necessary CPU resources.

  - Limits

    In Limits, you define the maximum amount of CPU and memory resources that a container can use. Limits protect the cluster from resource exhaustion by capping the amount of resources any single container can consume. This prevents containers from monopolizing resources, which could negatively impact the performance and stability of other applications in the cluster.

    - Memory (in Megabytes)

      Specify the maximum amount of memory your application can use. Enter a numeric value greater than or equal to 128. Defining a memory limit prevents your application from using more memory than allocated, which could otherwise lead to resource exhaustion and affect other applications running in the cluster.

    - CPU (in Millicores)

      Specify the maximum amount of CPU your application can use. Enter a numeric value greater than or equal to 50. Defining the CPU limit prevents your application from consuming more CPU resources than allocated, ensuring fair resource distribution among all applications in the cluster.
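The minimum values for requests and limits can be combined into one validation step. The sketch below applies the stated minimums (128 for memory, 50 for CPU) and also checks that no request exceeds its limit, which Kubernetes requires. The function name is hypothetical, and rendering the Megabytes field with the `Mi` suffix is an assumption about how the platform writes the values.

```python
MIN_MEMORY_MB = 128      # minimum accepted memory value stated above
MIN_CPU_MILLICORES = 50  # minimum accepted CPU value stated above


def build_resources(request_memory, request_cpu, limit_memory, limit_cpu):
    """Validate the four values and return a container resources block."""
    for label, value, minimum in (
        ("memory request", request_memory, MIN_MEMORY_MB),
        ("memory limit", limit_memory, MIN_MEMORY_MB),
        ("CPU request", request_cpu, MIN_CPU_MILLICORES),
        ("CPU limit", limit_cpu, MIN_CPU_MILLICORES),
    ):
        if value < minimum:
            raise ValueError(f"{label} must be at least {minimum}")
    # Kubernetes rejects a container whose request exceeds its limit.
    if request_memory > limit_memory or request_cpu > limit_cpu:
        raise ValueError("requests must not exceed limits")
    return {
        "requests": {"memory": f"{request_memory}Mi", "cpu": f"{request_cpu}m"},
        "limits": {"memory": f"{limit_memory}Mi", "cpu": f"{limit_cpu}m"},
    }


print(build_resources(128, 50, 256, 200))
```

Setting requests well below limits lets the scheduler pack pods more densely, while setting them equal gives the most predictable performance.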


- Trigger Types

The CD trigger determines when the deployment pipeline will run to push your application to the Kubernetes cluster. Depending on your chosen trigger type, deployments can run automatically after CI, on a fixed schedule, or on demand.

- Manual (Default and Mandatory)

  Executes deployment only when you manually trigger it from the Calibo Accelerate interface. Always selected by default for the first deployment. This ensures that the platform captures the necessary environment details, validates access to the Kubernetes cluster, and confirms deployment prerequisites before enabling automation.

  When to Use

  - Required for the first deployment of any technology from the platform.

  - Production environments requiring controlled, approved releases.

  - Cases where deployments must align with business timelines or maintenance windows.

  - Scenarios where manual QA or compliance review is required before release.

  Example

  A Java microservice that is deployed to production only after security and compliance teams approve the release.

- After CI

  Automatically starts deployment immediately after the CI pipeline completes successfully. No manual action is required between build completion and deployment.

  When to Use

  - Agile environments where code changes should be deployed as soon as they pass the build and test stages.

  - Lower environments (dev, qa) for continuous delivery and rapid feedback.

  - Automated validation workflows where every successful build should be deployed for verification.

  Example

  A React UI project deployed to a QA namespace as soon as the latest commit passes CI checks.

- Cron Schedule

  Runs deployment automatically at predefined intervals (for example, daily or weekly). Builds may occur more frequently, but deployments happen only on the set schedule.

  In each schedule, you can specify the following:

  - Time Zone: Select the desired time zone for the CD trigger schedule.

  - Frequency: Select the Every Day or Every Week option as required.

  - Time: Define when to trigger the pipeline (in hours and minutes). You can define multiple schedules as well.

5. Functional Testing

Enable the Functional Testing toggle to run automated test cases immediately after deployment. Configure the following fields:

-

Testing Tool – Select from configured testing tools (for example, Selenium).

-

Test Case Repository – Repository URL containing test scripts.

-

Branch – Branch of the test case repository.

-

Command – CLI command to execute the test cases.
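To make the relationship between the Test Case Repository, Branch, and Command fields concrete, here is a minimal sketch of the steps a test runner could perform with them. This is an assumption about the general flow, not a description of how Calibo Accelerate runs tests. The function name, repository URL, and Maven command are hypothetical examples. The sketch only builds the commands and does not execute them.

```python
import shlex


def functional_test_steps(repo_url, branch, command, workdir="tests"):
    """Return the argument lists a runner could execute, in order."""
    # Shallow-clone only the selected branch of the test case repository.
    clone = ["git", "clone", "--depth", "1", "--branch", branch, repo_url, workdir]
    # Split the configured CLI command into arguments using shell-style quoting.
    run = shlex.split(command)
    return [clone, run]


steps = functional_test_steps(
    "https://example.com/acme/ui-tests.git",  # hypothetical repository URL
    "main",
    "mvn test -Dbrowser=chrome",              # hypothetical test command
)
for argv in steps:
    print(shlex.join(argv))
```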

-

-

Click Add to save the details. The technology is added for deployment to your selected Kubernetes cluster.

Deploy Technologies to the Kubernetes Cluster

Depending on the CI/CD tool selected in your deployment stage configuration, some steps in the technology deployment flow vary. The following sections describe the steps separately for Jenkins and GitHub Actions.

After a technology is added for deployment to your selected Kubernetes cluster, do the following:

1. Run the CI Pipeline

-

On the technology card, click the ellipsis (...).

-

Click Run CI Pipeline. After the CI pipeline starts, the CI status changes to CI pipeline in progress.

-

Click Refresh in the top right to update the status.

-

After the pipeline completes, the CI status changes to CI pipeline success.

-

To check the real-time progress of your CI pipeline, go to the CI/CD Pipeline tab.

In the Continuous Integration (CI) pipeline for the technology being deployed, review each step:

-

Checkout SCM – Pulls source code from the configured repository.

-

Initialization – Prepares environment and sets up dependencies.

-

Build – Compiles and packages the application.

-

Unit Tests – Runs automated tests to validate code quality.

-

SonarQube Scan – Performs static code analysis for code quality and security.

-

Build Container Image – Creates a Docker image of the application.

-

Publish Container Image – Pushes the built image to the configured container registry.

-

2. Deploy the Technology

After CI is successful, you can deploy the technology to the cluster.

-

On the technology card, click the ellipsis (...).

-

Click Deploy.

-

In the Deploy side drawer:

-

In the Container Image Tag list, choose the tag you want to deploy (for example, latest or a version number).

-

Click Deploy.

CD status changes to Pipeline build is in progress.

-

Click Refresh in the top right to update the status. When the deployment is complete, the CD status changes to Deployed.

3. View Deployment Details

-

Click View Details on the technology card to check the following:

-

Basic Details

-

Context path from deployment configuration

-

Cluster name

-

Links to:

-

CI Pipeline (Jenkins job run)

-

CD Pipeline (Jenkins deployment run)

-

Artifactory (JFrog or ECR image repository)

-

-

Live Application – Click Browse to open the deployed app.

-

Log Details – View pod logs, readiness, status, restarts, and age.

-

-

Continuous Integration Tab

-

Displays the selected Source Code Branch

-

Shows the Pipeline Trigger (Manual, Code Change, or Schedule)

-

-

Continuous Deployment Tab

Shows the following details configured in the deployment configuration:

-

Namespace

-

Replicas

-

Networking – Port Number, Service Type, Ingress Status

-

Resources – CPU and memory requests and limits

-

Pipeline Trigger

-

-

-

To check real-time progress of your CD pipeline, go to the CI/CD Pipeline tab. The following stages are displayed:

-

Declarative: Checkout SCM – Retrieves the latest source code from the repository.

-

Initialization – Prepares the deployment environment and required settings.

-

Deploy – Deploys the application to the target Kubernetes cluster.
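The Continuous Deployment tab fields described above (namespace, replicas, port number, and CPU and memory requests and limits) correspond to standard fields of a Kubernetes Deployment object. The following sketch shows one way those values could map onto an `apps/v1` Deployment manifest. The function name, the example service name, the image reference, and the sample values are hypothetical. The manifest that the platform actually generates is not documented here and may differ. Service type and ingress settings belong to separate Service and Ingress objects and are omitted.

```python
import json


def build_deployment(name, image, namespace, replicas, port,
                     cpu_request, cpu_limit, memory_request, memory_limit):
    """Assemble an apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "namespace": namespace, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        "resources": {
                            "requests": {"cpu": cpu_request, "memory": memory_request},
                            "limits": {"cpu": cpu_limit, "memory": memory_limit},
                        },
                    }],
                },
            },
        },
    }


# Hypothetical sample values for illustration only.
manifest = build_deployment(
    name="orders-service",
    image="registry.example.com/orders-service:1.4.2",
    namespace="dev",
    replicas=2,
    port=8080,
    cpu_request="250m",
    cpu_limit="500m",
    memory_request="256Mi",
    memory_limit="512Mi",
)
print(json.dumps(manifest, indent=2))
```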

-

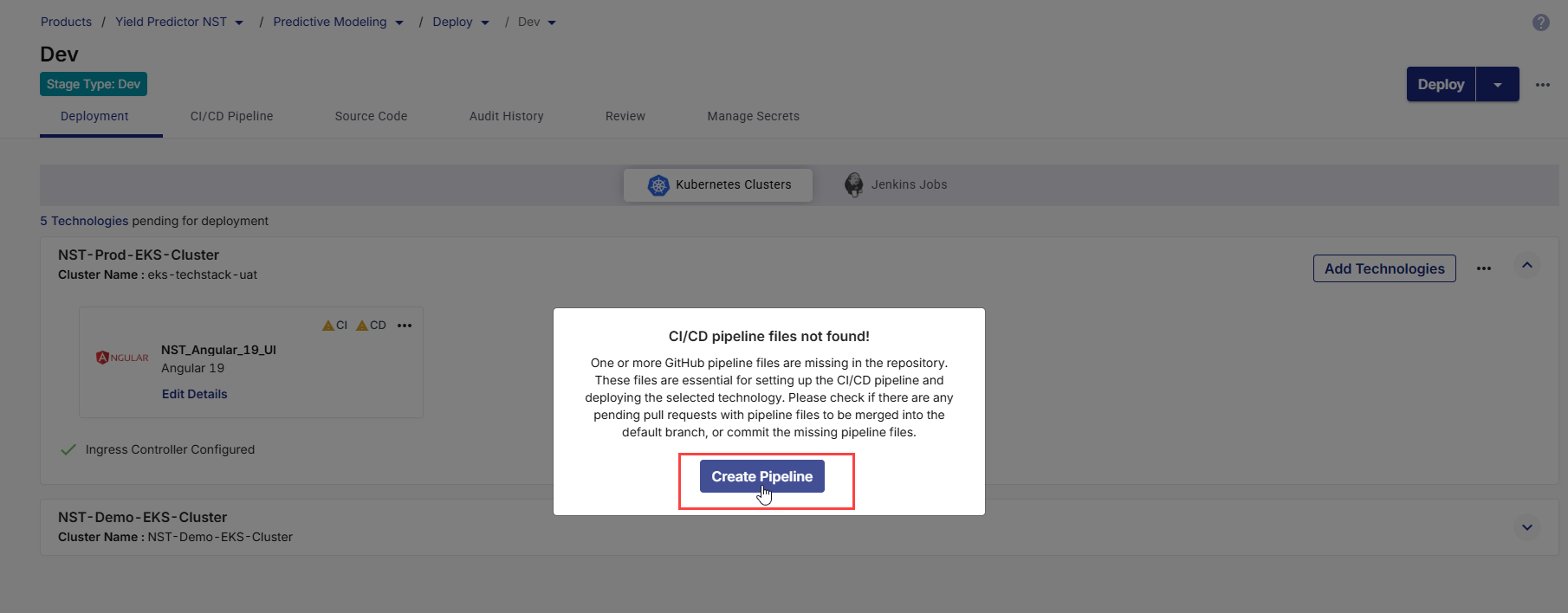

1. Missing YAML Workflow Files Are Identified

Calibo Accelerate automatically verifies the presence of the required workflow files in your source code repository as soon as you add a technology for deployment. These YAML workflow files define the CI/CD pipeline steps that allow the platform to build, test, and deploy your application. Without them, the platform cannot execute GitHub Actions workflows on your behalf.

If one or more required pipeline YAML files are not found in the repository, the platform displays the following message:

Click Create Pipeline.

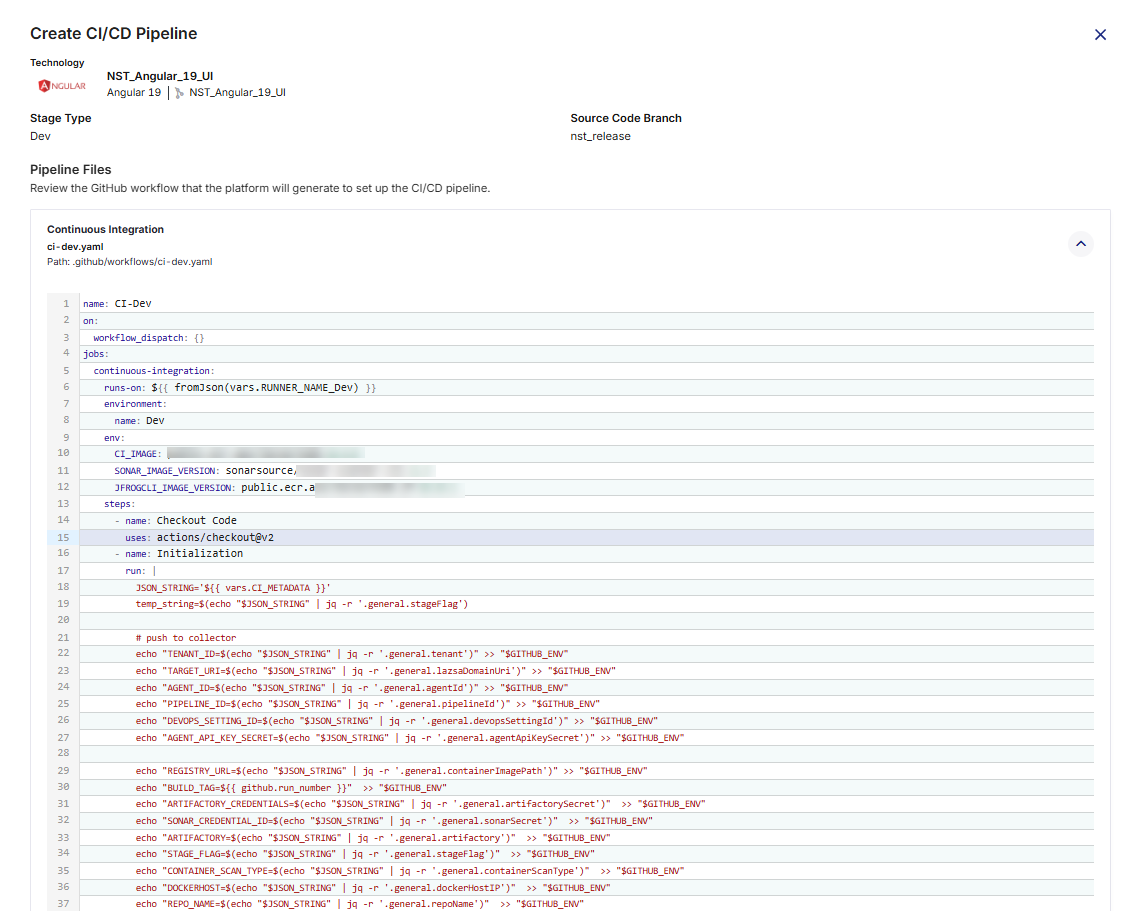

2. Review Pipeline Files

On the Create CI/CD Pipeline screen, the following required pipeline files are listed:

-

Continuous Integration (ci-dev.yaml) – Handles building the application, running unit tests, performing static code analysis (SonarQube), and preparing artifacts.

-

Continuous Deployment (deploy-to-k8s-dev.yaml) – Handles deployments to the Kubernetes cluster, supporting deploy, promote, rollback, and destroy actions, along with optional functional testing and artifact promotion.

Click each file’s expand icon to preview its full YAML content.

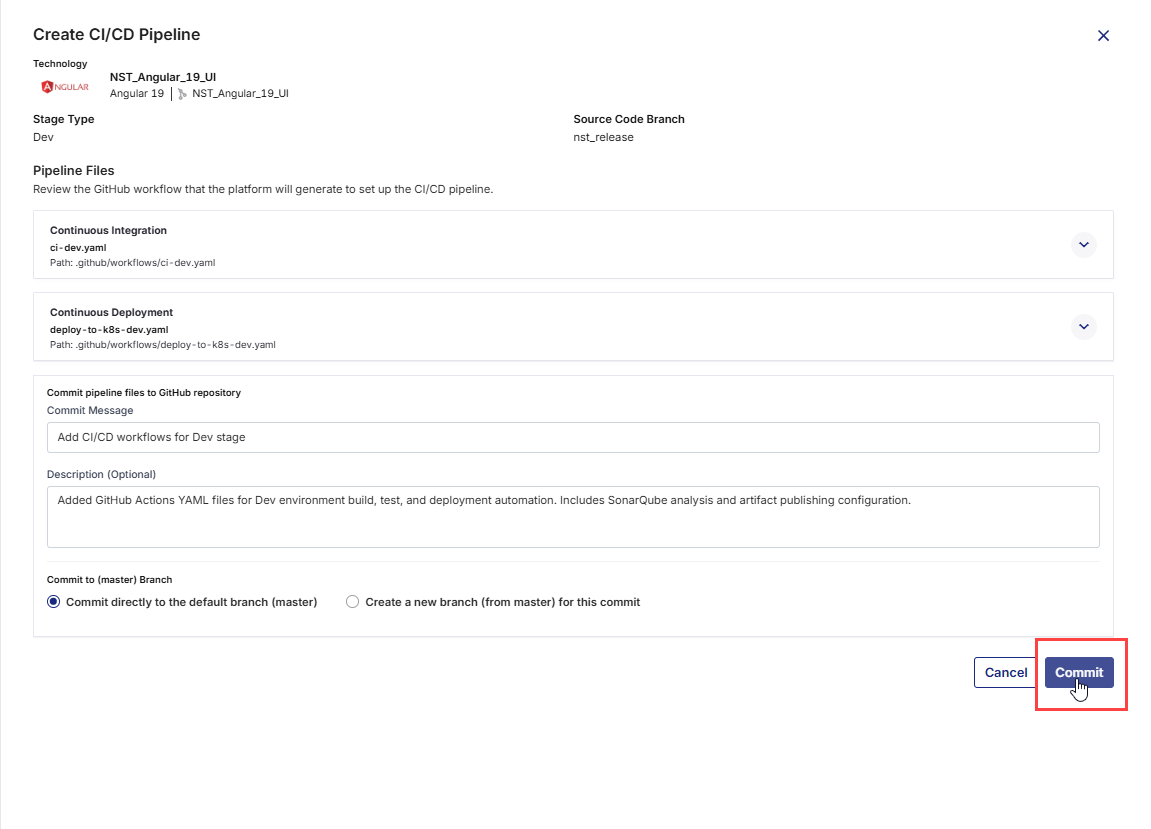

3. Commit the Workflow Files to GitHub Repository

Once you have reviewed the CI/CD workflow files (for both Continuous Integration and Continuous Deployment), you must commit them to your GitHub repository so that they can be executed by GitHub Actions. Committing these files will place them under the .github/workflows/ directory in your repository, making them available for the platform to trigger pipeline runs.

-

Commit Message: A short, clear summary of the change you are committing. For example:

Add CI/CD workflows for Dev stage.

-

Description (Optional): Additional context for your team, especially if the commit involves multiple steps or dependencies. Useful for complex changes or when multiple stakeholders are reviewing the commit. For example:

Added GitHub Actions YAML files for Dev environment build, test, and deployment automation. Includes SonarQube analysis and artifact publishing configuration.

-

Branch Selection:

You can choose where to commit the workflow files in the GitHub repository.

-

Commit directly to the default branch: The workflows become active immediately in your repository. Choose this option for urgent changes or when you are confident the workflows are ready for production use.

-

Create a new branch: Commits the files to a separate branch, allowing review and testing before merging into the default branch. This is useful for collaborative environments where changes need to be peer-reviewed.

After filling in the fields, click Commit to save the workflow files.

These files will then be available in your repository’s .github/workflows/ folder, and GitHub Actions will start using them according to the triggers defined in the YAML files.
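If you want to confirm locally that the commit placed both files where GitHub Actions expects them, a check along the following lines can be run against a clone of the repository. This is a minimal sketch and not the platform's own verification logic. The file names are the ones listed on the Create CI/CD Pipeline screen and may differ for other stages, and the function name is hypothetical.

```python
import tempfile
from pathlib import Path

# File names as listed on the Create CI/CD Pipeline screen; other stages
# may use different names.
REQUIRED_WORKFLOWS = ("ci-dev.yaml", "deploy-to-k8s-dev.yaml")


def missing_workflows(repo_root, required=REQUIRED_WORKFLOWS):
    """Return the required workflow files absent from .github/workflows/."""
    workflows_dir = Path(repo_root) / ".github" / "workflows"
    return [name for name in required if not (workflows_dir / name).is_file()]


# Demo against a throwaway directory standing in for a local clone.
with tempfile.TemporaryDirectory() as repo:
    workflows = Path(repo) / ".github" / "workflows"
    workflows.mkdir(parents=True)
    (workflows / "ci-dev.yaml").write_text("name: ci\n")
    print(missing_workflows(repo))  # ['deploy-to-k8s-dev.yaml']
```

An empty list means both workflow files are present on the checked-out branch.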

4. Run the CI Pipeline

-

On the technology card, click the ellipsis (...).

-

Click Run CI Pipeline. After the CI pipeline starts, the CI status changes to CI pipeline in progress.

-

Click Refresh in the top right to update the status.

-

After the pipeline completes, the CI status changes to CI pipeline success.

-

To check the real-time progress of your CI pipeline, go to the CI/CD Pipeline tab.

In the Continuous Integration (CI) pipeline for the technology being deployed, review each step:

-

Set Up Job – Initializes the environment.

-

Checkout Code – Pulls the source code from GitHub.

-

Initialization – Sets environment variables and prepares build context.

-

Build – Compiles and prepares the application.

-

Unit Tests – Runs automated tests.

-

Build Container Image – Creates Docker image for deployment.

-

Publish Container Image – Pushes image to the configured registry (JFrog/ECR).

-

Push to Collector – Sends build metadata to Calibo.

-

Post Checkout Code and Complete Job – Final cleanup and completion.

-

5. Deploy the Technology

After CI is successful, you can deploy the technology to the cluster.

-

On the technology card, click the ellipsis (...).

-

Click Deploy.

-

In the Deploy side drawer:

-

Select Container Image Tag. Choose the tag you want to deploy (for example, latest or a version number).

-

Click Deploy.

CD status changes to Pipeline build is in progress.

-

Click Refresh in the top right to update the status. When the deployment is complete, the CD status changes to Deployed.

6. View Deployment Details

-

Click View Details on the technology card to check the following:

-

Basic Details

-

Context path from deployment configuration

-

Cluster name

-

Links to:

-

CI Pipeline (GitHub Actions workflow run)

-

CD Pipeline (GitHub Actions deployment run)

-

Artifactory (JFrog or ECR image repository)

-

-

Live Application – Click Browse to open the deployed app.

-

Log Details – View pod logs, readiness, status, restarts, and age.

-

-

-

Continuous Integration Tab

-

Displays the selected Source Code Branch

-

Shows the Pipeline Trigger (Manual, After Push, or Cron Schedule)

-

-

Continuous Deployment Tab

Shows the following details configured in the deployment configuration:

-

Namespace

-

Replicas

-

Networking – Port Number, Service Type, Ingress Status

-

Resources – CPU and memory requests and limits

-

Pipeline Trigger

-

-

-

To check the real-time progress of your CD pipeline, go to the CI/CD Pipeline tab. The following stages are displayed:

-

Set Up Job – Prepares the runner environment.

-

Checkout Code – Pulls repository code for deployment.

-

Initialization – Installs tools and dependencies.

-

Configure Kubeconfig File – Sets up Kubernetes cluster access.

-

Deploy to Kubernetes – Applies configurations to the cluster.

-

Push to Collector – Sends deployment data to Calibo Accelerate.

-

Post Checkout Code – Cleans up repository workspace.

-

Complete Job – Marks the workflow as finished.

-

-

The first run must be manual so that Calibo Accelerate can fetch necessary metadata and initialize tracking for the pipeline.

-

After the initial run, subsequent executions can be triggered automatically based on your pipeline trigger configuration.

-

Always monitor pipeline logs in both Calibo Accelerate and GitHub for troubleshooting build or deployment issues.
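The rule that the first run must be manual, combined with the CD trigger options (Manual, After CI, and Cron Schedule), can be summarized as a small decision function. This is only a sketch of the behavior described in this topic. The function and parameter names are hypothetical, and it assumes that a manual request is accepted regardless of the configured trigger, which this topic does not state explicitly.

```python
def should_deploy(trigger, first_manual_run_done, *, manual_request=False,
                  ci_succeeded=False, schedule_due=False):
    """Decide whether a deployment should start for the given CD trigger."""
    if manual_request:
        return True               # assumption: a manual run is always accepted
    if not first_manual_run_done:
        return False              # automation waits for the first manual run
    if trigger == "After CI":
        return ci_succeeded       # deploy right after a successful CI run
    if trigger == "Cron Schedule":
        return schedule_due       # deploy only when a schedule fires
    return False                  # "Manual": only explicit requests deploy


# Before the first manual run, a successful CI build does not deploy.
print(should_deploy("After CI", False, ci_succeeded=True))  # False
# After the first manual run, the same event triggers a deployment.
print(should_deploy("After CI", True, ci_succeeded=True))   # True
```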

Uninstall a Technology

When a technology is no longer required in your product or pipeline, you can uninstall it from Calibo Accelerate. Uninstalling a technology removes its configuration and also deletes the associated Continuous Integration (CI) and Continuous Deployment (CD) jobs from the configured CI/CD tool (Jenkins or GitHub Actions).

This ensures your product remains clean and that no unnecessary jobs are executed in the background.

To uninstall a technology, do the following:

-

On the technology card, click the ellipsis (...) menu in the upper-right corner.

-

From the dropdown menu, select Uninstall.

-

A confirmation dialog box appears.

-

Click Uninstall to proceed.

-

Click Cancel if you want to retain the technology.

-

-

Once confirmed:

-

The selected technology is removed from Calibo Accelerate.

-

Related CI and CD jobs are deleted automatically from the connected CI/CD tool (Jenkins or GitHub Actions).

-

Edit Technology Details

After a technology is added to your product, you may need to update its deployment configurations over time. For example, you might want to adjust the context path, update CI/CD settings, or modify functional testing parameters.

To edit technology details, do the following:

-

On the technology card, click the ellipsis (...) menu in the upper-right corner.

-

From the dropdown menu, select Edit Details. This opens the deployment configuration details for the selected technology.

-

Modify the desired settings.

-

Save your changes.

With the updated configuration in place, you can now trigger the CI pipeline and deploy the technology to the selected environment.

What's next? Data Pipeline Studio