Prioritization and Approval

The Use Case Prioritization and Approval phase is the decision checkpoint that determines which enriched use cases move forward into implementation. It converts a curated list of enriched use cases into a ranked and approved execution roadmap, and it aligns business, technical, and governance stakeholders by evaluating feasibility, value, and readiness with standardized scoring frameworks.

This is where organizational aspirations become tangible—translating possibilities into approved projects with clear timelines, committed resources, and leadership buy-in.

At this stage, DBIM recommends that stakeholders apply structured prioritization techniques such as ICE (Impact–Confidence–Ease) or the Weighted Scoring Model (WSM) to assess each use case objectively and consistently. The outcome is a high-confidence, resource-aligned roadmap that supports effective portfolio planning and agile delivery execution. The team aligns on top priorities and completes the management phase of the DBIM methodology—ready to move into development with clear timelines, expectations, and delivery plans.

| Goals | Outcome |
|---|---|
| | |

Proven Prioritization Techniques: ICE and WSM

To bring objectivity to this decision-making phase, DBIM recommends applying one of the following frameworks:

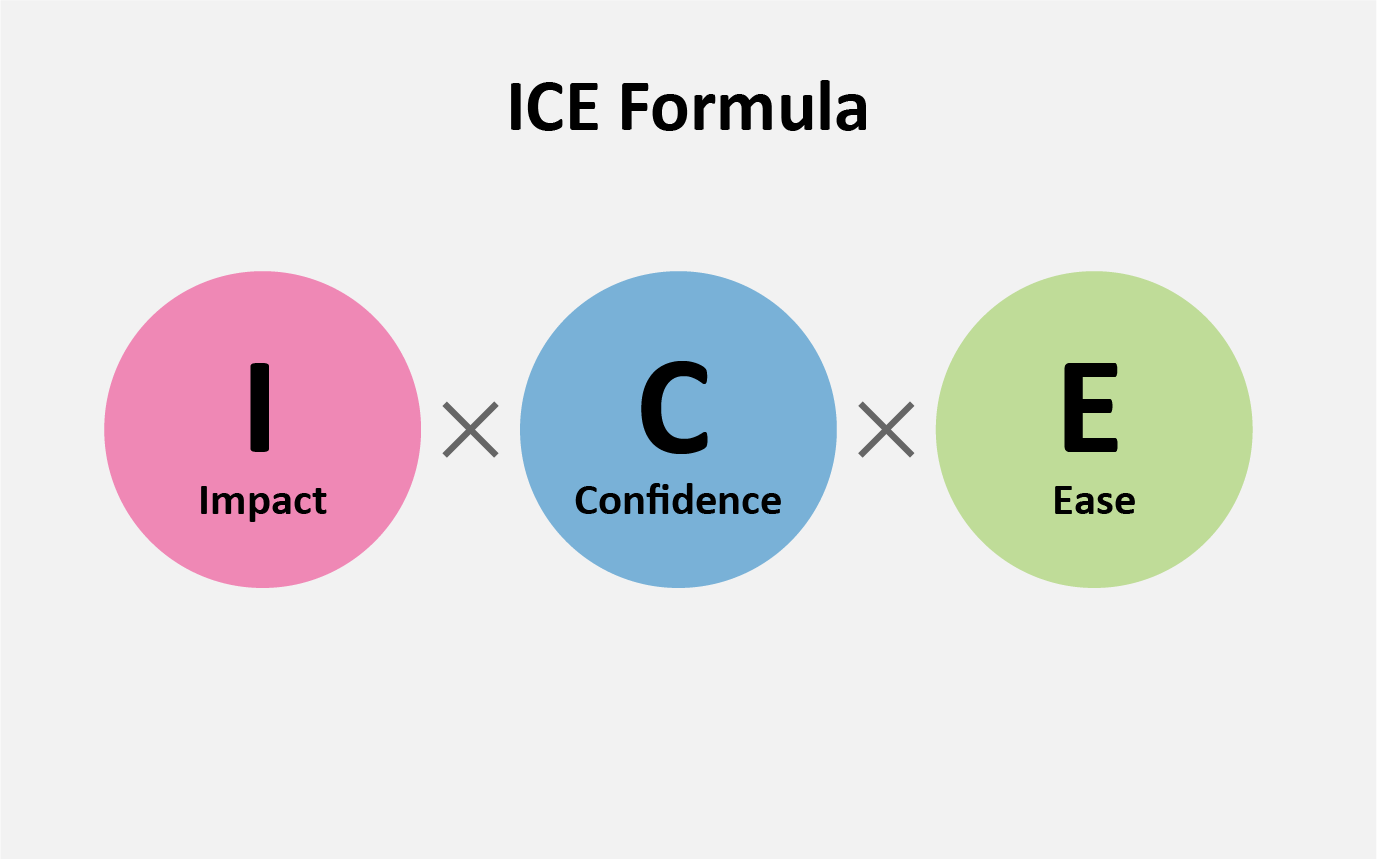

The ICE scoring model is a quick and intuitive prioritization technique used to evaluate ideas based on three factors: Impact, Confidence, and Ease of implementation.

Each factor is scored on a scale from 1 to 10, and the formula (Impact × Confidence × Ease) produces a composite score.

| Factor | Description | Scale |
|---|---|---|
| Impact | Potential business value | 1–10 |
| Confidence | How sure are we of success? | 1–10 |
| Ease | Simplicity of execution (low effort = high ease) | 1–10 |

This method is especially useful in early-stage evaluations where speed and simplicity are key. ICE helps teams rapidly surface high-potential use cases with minimal effort. It’s ideal for hackathons, MVP selection, or shortlisting from a large pool of options.
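As a minimal sketch of the formula above, the ICE score can be computed as shown here. The function name `ice_score` and the range check are illustrative assumptions, not part of any Calibo tooling.

```python
def ice_score(impact: int, confidence: int, ease: int) -> int:
    """Return Impact x Confidence x Ease for factors scored 1-10."""
    factors = (("impact", impact), ("confidence", confidence), ("ease", ease))
    for name, value in factors:
        if not 1 <= value <= 10:
            raise ValueError(f"{name} must be between 1 and 10, got {value}")
    return impact * confidence * ease


# Hypothetical use case: high impact, fair confidence, moderate effort.
print(ice_score(impact=8, confidence=6, ease=5))  # 240
```

Because the three factors are multiplied, the composite ranges from 1 to 1000, and a single low factor pulls the whole score down sharply.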

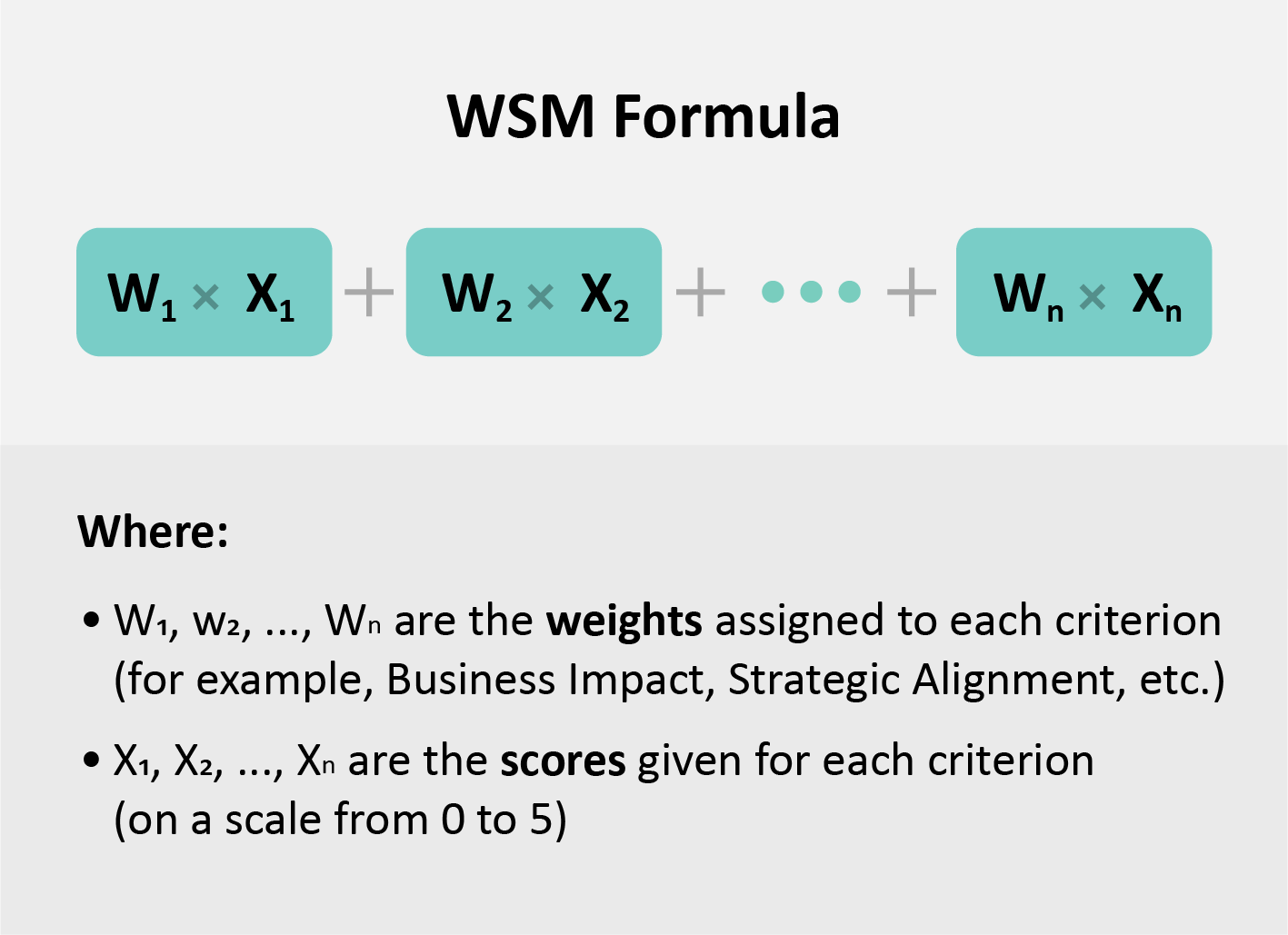

Compared with ICE, the Weighted Scoring Model (WSM) is a more structured and detailed scoring system that ranks use cases against multiple business and technical criteria, each with an assigned weight. Stakeholders score each use case (typically on a scale of 1 to 5) against each criterion (for example, business impact, tech readiness, customer value), and the weighted total determines its rank.

WSM promotes cross-functional consensus and forces trade-off conversations that surface strategic priorities. It is well-suited for organizations with complex portfolios and competing demands. Unlike ICE, WSM offers a nuanced, transparent, and traceable prioritization process.

Choosing between ICE and WSM depends on the context, time available, and depth of analysis needed. While ICE is a fast and intuitive method to prioritize at a high level, WSM offers a more detailed, criteria-based approach ideal for strategic decision-making. You don’t always have to pick one—many teams use ICE for initial screening and WSM for final approval. The goal is to move forward with high-confidence, stakeholder-aligned decisions.

| Use Scenario | Recommendation |
|---|---|
| You need a quick, high-level comparison across many use cases. | Use ICE |
| You need to evaluate across multiple strategic criteria or compare nuanced business trade-offs. | Use WSM |
| You want to combine both for a balanced approach. | Use both: ICE for initial filtering, WSM for deeper evaluation |
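The combined approach in the last row can be sketched as a two-stage funnel: screen the full pool with ICE, then score only the shortlist with WSM. Everything in this example (the `UseCase` class, the two criteria, their weights, and the sample scores) is hypothetical and chosen only to keep the sketch short.

```python
from dataclasses import dataclass, field


@dataclass
class UseCase:
    name: str
    impact: int       # ICE factors, each scored 1-10
    confidence: int
    ease: int
    # WSM scores (0-5) per criterion; only filled in for shortlisted use cases
    wsm_scores: dict = field(default_factory=dict)


def ice(uc: UseCase) -> int:
    return uc.impact * uc.confidence * uc.ease


def wsm(uc: UseCase, weights: dict) -> int:
    return sum(weights[c] * uc.wsm_scores[c] for c in weights)


def screen_with_ice(use_cases, keep):
    """Stage 1: keep the `keep` use cases with the highest ICE scores."""
    return sorted(use_cases, key=ice, reverse=True)[:keep]


def rank_with_wsm(shortlist, weights):
    """Stage 2: order the shortlist by weighted score, highest first."""
    return sorted(shortlist, key=lambda uc: wsm(uc, weights), reverse=True)


# Hypothetical weights (percent) and use cases, for illustration only.
weights = {"business_impact": 60, "ease_of_implementation": 40}
pool = [
    UseCase("Use Case X", 9, 8, 6),   # ICE 432
    UseCase("Use Case Y", 7, 7, 7),   # ICE 343
    UseCase("Use Case Z", 4, 5, 5),   # ICE 100, dropped at screening
]

shortlist = screen_with_ice(pool, keep=2)
shortlist[0].wsm_scores = {"business_impact": 4, "ease_of_implementation": 3}  # X
shortlist[1].wsm_scores = {"business_impact": 5, "ease_of_implementation": 4}  # Y

print([uc.name for uc in rank_with_wsm(shortlist, weights)])
# ['Use Case Y', 'Use Case X']
```

Scoring only the shortlist keeps the more expensive WSM discussion focused on the use cases that survived the quick ICE pass.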

Calibo’s Automated Prioritization Template

PRO TIP

This is a collaborative, strategic activity. Conduct a prioritization workshop with product owners, architects, engineering leads, and other stakeholders to discuss each use case and finalize scores by consensus.

To help you streamline the use case prioritization process, Calibo provides an Automated Prioritization Template that allows teams to score and rank use cases using both ICE and WSM models.

- For ICE: Enter scores (1–10) for Impact, Confidence, and Ease.
- For WSM: Enter scores (0–5) across criteria. The template auto-calculates the weighted score.

| Use Case Title | MoSCoW | Impact (1–10) | Confidence (1–10) | Ease (1–10) | ICE Score (I × C × E) | Priority |
|---|---|---|---|---|---|---|
| [Use Case A] | Must-Have | 8 | 9 | 7 | 504 | 1 |
| [Use Case B] | Should-Have | 7 | 7 | 5 | 245 | 3 |
| [Use Case C] | Must-Have | 9 | 8 | 6 | 432 | 2 |
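The scores and priorities in the sample table can be reproduced with a few lines of code. This is a sketch of the arithmetic only; how the actual template ranks rows internally (for example, how it breaks ties) is not documented here, so the tie-breaking below is an assumption.

```python
# (title, impact, confidence, ease) taken from the sample table above
rows = [
    ("Use Case A", 8, 9, 7),
    ("Use Case B", 7, 7, 5),
    ("Use Case C", 9, 8, 6),
]


def rank_by_ice(rows):
    """Return (priority, title, ice_score) tuples, highest score first.

    Assumption: ties keep their input order, because Python's sort is stable.
    """
    scored = [(title, i * c * e) for title, i, c, e in rows]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [(priority, title, score)
            for priority, (title, score) in enumerate(scored, start=1)]


for priority, title, score in rank_by_ice(rows):
    print(priority, title, score)
# 1 Use Case A 504
# 2 Use Case C 432
# 3 Use Case B 245
```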

How to Use the ICE Template in Practice

ICE is especially effective early in prioritization when you need a high-level view of what to move forward quickly, what to re-evaluate, and what to drop.

- Collaborative Scoring: Bring together stakeholders (product owners, architects, engineering leads) in a prioritization workshop. Discuss each use case in context and score collaboratively to reflect a shared perspective.
- Be Honest and Realistic: Avoid the temptation to inflate scores. A moderate but accurate ICE score is more useful than a falsely high one.
- Normalize Across Use Cases: If multiple teams are scoring different use cases, keep scoring comparable across the board by using a consistent rubric or shared facilitation.
- Use the Calibo ICE Template: Enter your scores (1–10 for each factor) in the ICE scoring columns of the template. The sheet automatically calculates ICE scores and ranks use cases by priority.

In this template, we have considered the following commonly used WSM criteria with example weights out of 100. You may revise these weights based on stakeholder consensus or portfolio-level priorities (for example, if your organization is data-first, you might assign more weight to Data Availability or Technology Readiness).

| Criterion | Weight (%) | Description |
|---|---|---|
| Business Impact (BI) | 25 | Potential to drive revenue, reduce cost, or increase efficiency |
| Strategic Alignment (SA) | 20 | Fit with organizational goals, strategic priorities, and key initiatives |
| Technology Readiness (TR) | 15 | Maturity and availability of technologies required |
| Data Availability (DA) | 10 | Availability and quality of data needed for execution |
| Customer Experience (CX) | 10 | Ability to improve customer satisfaction, engagement, or retention |
| Ease of Implementation (EOI) | 10 | Relative effort, complexity, and resourcing required |
| Cost Efficiency (CE) | 10 | Cost-effectiveness relative to potential value and ROI |
| Total | 100 | Represents the sum of all individual criteria weights, ensuring a balanced evaluation framework. |
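If you revise the weights, they should still total 100 so that scores stay comparable across use cases. The sketch below shows one possible way to handle this: raise the weights that matter more, then rescale everything proportionally. The helper `rescale_weights` and the "data-first" adjustment are hypothetical; stakeholders may equally prefer to set each weight by hand.

```python
# Default weights (percent) from the table above
DEFAULT_WEIGHTS = {"BI": 25, "SA": 20, "TR": 15, "DA": 10, "CX": 10, "EOI": 10, "CE": 10}


def rescale_weights(weights: dict, total: float = 100) -> dict:
    """Scale weights proportionally so that they sum to `total`."""
    current = sum(weights.values())
    if current <= 0:
        raise ValueError("weights must sum to a positive number")
    return {criterion: value * total / current for criterion, value in weights.items()}


assert sum(DEFAULT_WEIGHTS.values()) == 100

# Hypothetical data-first adjustment: raise DA and TR to 20 each (sum becomes 115).
custom = dict(DEFAULT_WEIGHTS, DA=20, TR=20)
rescaled = rescale_weights(custom)

print(round(sum(rescaled.values()), 6))  # 100.0
print(round(rescaled["DA"], 2))          # 17.39
```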

In Calibo’s Automated Prioritization Template, you only need to score each use case against each criterion on a 0–5 scale, where:

| Score | Interpretation |
|---|---|
| 0 | Does not meet the criterion at all / Not applicable |
| 1 | Very poor alignment or contribution to the criterion |
| 2 | Limited alignment; some relevance, but weak or underdeveloped |
| 3 | Moderate alignment; acceptable but not exceptional |
| 4 | Strong alignment; meets the criterion effectively |
| 5 | Excellent alignment; fully satisfies the criterion with high strategic value |

The template automatically calculates the WSM score using the predefined weightage and the scores you provide.

| Use Case Title | BI (25%) | SA (20%) | TR (15%) | DA (10%) | CX (10%) | EOI (10%) | CE (10%) | WSM Score |
|---|---|---|---|---|---|---|---|---|
| [Use Case A] | 4 | 5 | 4 | 3 | 5 | 4 | 5 | 430 |
| [Use Case B] | 5 | 4 | 5 | 5 | 4 | 3 | 4 | 440 |
| [Use Case C] | 3 | 3 | 2 | 2 | 3 | 2 | 3 | 265 |
| [Use Case D] | 2 | 4 | 3 | 3 | 2 | 3 | 4 | 295 |
| Your Use Case Here | x | x | x | x | x | x | x | (auto) |
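The calculation behind the WSM Score column can be sketched as a weighted sum: each 0–5 score is multiplied by its criterion weight in percent, giving a maximum of 500. This reading is an assumption inferred from the Use Case A row; the function name `wsm_score` and its validation are illustrative, not the template's actual implementation.

```python
# Criterion weights (percent) from the column headers above
WEIGHTS = {"BI": 25, "SA": 20, "TR": 15, "DA": 10, "CX": 10, "EOI": 10, "CE": 10}


def wsm_score(scores: dict, weights: dict = WEIGHTS) -> int:
    """Return the weighted sum of 0-5 criterion scores (maximum 500)."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    for criterion in weights:
        if not 0 <= scores[criterion] <= 5:
            raise ValueError(f"{criterion} must be between 0 and 5")
    return sum(weights[c] * scores[c] for c in weights)


# Scores for [Use Case A] from the table above
use_case_a = {"BI": 4, "SA": 5, "TR": 4, "DA": 3, "CX": 5, "EOI": 4, "CE": 5}
print(wsm_score(use_case_a))  # 430
```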

How to Use the WSM Template in Practice

- Customize the criteria and weights based on what matters most to your organization.
- In your Prioritization Meeting, gather stakeholders (product, engineering, architecture, governance).
- Review each enriched use case, and score each criterion using a 0–5 scale.
- Discuss differences in scoring to ensure consensus and shared understanding.
- Let the template auto-calculate the WSM score for each use case.
- Sort use cases by final WSM score to finalize your delivery roadmap.
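For the step on discussing scoring differences, one option is to collect each stakeholder's individual scores before the meeting and flag the criteria where they diverge most. The sketch below is a hypothetical aid for that discussion; the `max_spread` threshold and the sample ratings are assumptions, and the mean is only a starting point, not a substitute for the agreed score.

```python
from statistics import mean


def consensus_report(ratings: dict, max_spread: int = 1) -> dict:
    """Summarize per-criterion stakeholder scores (0-5).

    `ratings` maps a criterion to a list of scores, one per stakeholder.
    A criterion is flagged for discussion when the gap between the highest
    and lowest score exceeds `max_spread`.
    """
    report = {}
    for criterion, scores in ratings.items():
        spread = max(scores) - min(scores)
        report[criterion] = {
            "mean": round(mean(scores), 2),
            "spread": spread,
            "discuss": spread > max_spread,
        }
    return report


# Hypothetical scores from three stakeholders for one use case
ratings = {"BI": [4, 5, 4], "TR": [2, 5, 3], "DA": [3, 3, 3]}

for criterion, row in consensus_report(ratings).items():
    flag = "discuss" if row["discuss"] else "ok"
    print(criterion, row["spread"], flag)
# BI 1 ok
# TR 3 discuss
# DA 0 ok
```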

Checklist: Prioritization and Approval Readiness

The prioritization and approval checklist ensures that only high-value enriched use cases move forward to the design and development stages. It confirms that scoring models (for example, ICE or WSM) have been applied to each enriched use case, that use cases are ranked by their final priority score, and that their status is updated to "Approved", so stakeholders are ready for downstream execution.

| Sl. No. | Item | Status (Y/N/NA) | Comments |
|---|---|---|---|
| 1 | Scoring models (ICE and/or WSM) applied to all enriched use cases | Y | |
| 2 | Use cases ranked based on final scores | Y | |
| 3 | Stakeholders reviewed and validated prioritization outcomes | Y | |
| 4 | Status updated to “Approved” | Y | |
| 5 | Roadmap planning initiated for top use cases | Y | |

Note:

This checklist is a suggested template. You can customize it based on your governance model and prioritization framework.

PRO TIP:

Always sanity-check your top priorities against business timelines, resource capacity, and quick wins. Balance value, urgency, and feasibility to build a roadmap that's both ambitious and achievable.

Advance Bank: Prioritizing Enriched Use Cases

After enriching nine high-potential use cases, the leadership team at Advance Bank—Joseph George (Portfolio Owner), Martha Grace (CPO), and Priya Sharma (Product Owner)—convened to identify which ones should advance to development.

They adopted a structured approach using both ICE and WSM scoring techniques to rank the use cases by value, feasibility, and effort.

Using the ICE scoring framework, the team collaboratively scored each use case for Impact, Confidence, and Ease of implementation.

| Use Case Title | MoSCoW | Use Case Size | Impact (1–10) | Confidence (1–10) | Ease (1–10) | ICE Score | Rank |
|---|---|---|---|---|---|---|---|
| Credit Scoring Engine Using Alternative Data | Must-Have | Large | 8 | 8 | 7 | 448 | 1 |
| Real-Time Fraud Detection Using Behavioral Signals | Must-Have | Complex | 9 | 8 | 6 | 432 | 2 |
| Social Media Sentiment Trends | Could-Have | Medium | 7 | 7 | 7 | 343 | 4 |
| Early Warning System for High-Risk Borrowers | Must-Have | Large | 9 | 7 | 6 | 378 | 3 |
| Audit Trail for CI/CD Deployments | Should-Have | Medium | 6 | 7 | 6 | 252 | 7 |
| AML (Anti-Money Laundering) | Must-Have | Complex | 8 | 7 | 6 | 336 | 5 |
| Sentiment Analysis of Customer Reviews | Must-Have | Large | 8 | 8 | 5 | 320 | 6 |
| Robo-Advisors (Financial Planning) | Should-Have | Medium | 6 | 6 | 6 | 216 | 8 |

By ICE alone, the Sentiment Analysis of Customer Reviews use case ranked only #6. It is a must-have with high impact (8) and confidence (8), but its lower ease-of-implementation score (5) pulled its ICE score down to 320.

To account for broader strategic considerations, the team used the Weighted Scoring Model (WSM).

| Use Case Title | BI | SA | TR | DA | CX | EOI | CE | WSM Score | Rank |
|---|---|---|---|---|---|---|---|---|---|
| Real-Time Fraud Detection Using Behavioral Signals | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 500 | 1 |
| Sentiment Analysis of Customer Reviews | 5 | 5 | 5 | 5 | 5 | 5 | 4 | 480 | 2 |
| Credit Scoring Engine Using Alternative Data | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 400 | 3 |
| AML (Anti-Money Laundering) | 3 | 3 | 2 | 2 | 3 | 2 | 2 | 255 | 4 |
| Automated KYC | 3 | 3 | 1 | 1 | 2 | 1 | 3 | 220 | 6 |
| Market Sentiment Analysis | 2 | 2 | 2 | 2 | 1 | 2 | 2 | 190 | 7 |
| Robo-Advisors | 2 | 2 | 1 | 2 | 1 | 1 | 1 | 170 | 8 |
| Smart Portfolio Management | 2 | 2 | 1 | 2 | 2 | 1 | 1 | 165 | 9 |

Key Insight

While ICE initially placed Sentiment Analysis of Customer Reviews lower because of its implementation effort, WSM captured its strong strategic alignment, high business impact, and customer experience value, elevating it to #2 overall.

The team agreed: This use case combines strategic value with proven feasibility and was therefore selected as Advance Bank’s top-priority MVP for development.
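The shift between the two rankings can be made explicit by comparing each use case's ICE rank with its WSM rank. The sketch below uses the ranks from the two tables above, limited to the four use cases that appear in both under the same title; the helper `rank_shifts` is illustrative.

```python
# Ranks copied from the ICE and WSM tables above
ice_rank = {
    "Credit Scoring Engine Using Alternative Data": 1,
    "Real-Time Fraud Detection Using Behavioral Signals": 2,
    "AML (Anti-Money Laundering)": 5,
    "Sentiment Analysis of Customer Reviews": 6,
}
wsm_rank = {
    "Real-Time Fraud Detection Using Behavioral Signals": 1,
    "Sentiment Analysis of Customer Reviews": 2,
    "Credit Scoring Engine Using Alternative Data": 3,
    "AML (Anti-Money Laundering)": 4,
}


def rank_shifts(before: dict, after: dict) -> list:
    """Return (name, places_gained) pairs, largest gain first.

    A positive number means the use case moved up under the second ranking.
    """
    shifts = {name: before[name] - after[name] for name in before if name in after}
    return sorted(shifts.items(), key=lambda item: item[1], reverse=True)


for name, shift in rank_shifts(ice_rank, wsm_rank):
    print(f"{shift:+d}  {name}")
# +4  Sentiment Analysis of Customer Reviews
# +1  Real-Time Fraud Detection Using Behavioral Signals
# +1  AML (Anti-Money Laundering)
# -2  Credit Scoring Engine Using Alternative Data
```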

| What's next? Use Case Development Orchestration |