Using Glue Data Catalog as Metastore for Databricks in Cross-Account Setup

Before you read the configuration mentioned in this topic, we recommend you read the configuration steps mentioned in the following topics:

Let us take an example to understand how you can use AWS Glue Data Catalog as a metastore with Databricks and S3 located across AWS accounts.

Prerequisites

-

AWS Admin Access

You must have AWS administrator access to IAM roles and policies in the AWS accounts from where you want to access Databricks, S3, and Glue Data Catalog.

-

Working Databricks Subscription

You must have an existing Databricks workspace.

Assumptions

To explain the cross-account scenario, we assume the following:

-

Databricks and AWS Glue Data Catalog are in different AWS accounts.

-

Databricks and S3 can be in the same AWS account or different AWS accounts depending on your requirements.

-

You have already created the IAM roles that grant Databricks the permissions it needs to access S3.

Configure Glue Data Catalog as the Metastore in Databricks

To configure a Databricks cluster to use AWS Glue Data Catalog as its metastore:

-

In your Databricks workspace, go to the Clusters section.

-

Select the cluster where you want to enable the Glue Metastore.

-

Under Advanced Options, find the Spark tab.

-

Scroll down to the Metastore section and add the following settings:

spark.hadoop.hive.metastore.glue.catalogid <aws-account-id-for-glue-catalog>

spark.hadoop.aws.region <aws-region-for-glue-catalog>

These two settings are used to configure Databricks to connect to an AWS Glue Data Catalog located in a different AWS account or region:

-

spark.hadoop.hive.metastore.glue.catalogid <aws-account-id-for-glue-catalog>

This setting specifies the AWS account ID where the Glue Data Catalog is located. By setting this, Databricks knows which account’s Glue Data Catalog to connect to, enabling cross-account access to the metadata stored in Glue.

-

spark.hadoop.aws.region <aws-region-for-glue-catalog>

This setting specifies the AWS region where the Glue Data Catalog resides. It ensures that Databricks connects to the correct AWS region to retrieve metadata from Glue, which is necessary if the Glue catalog is in a different region than Databricks.
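
As a rough illustration, the two settings can be rendered from an account ID and a region with a small helper. This is a minimal sketch, not part of any Databricks or Calibo tooling: the function name `glue_spark_conf` and its validation rules are assumptions made for this example. The optional `spark.databricks.hive.metastore.glueCatalog.enabled` line is described in the Databricks documentation rather than in this topic, so the sketch emits it only on request.

```python
import re


def glue_spark_conf(catalog_account_id: str, catalog_region: str,
                    include_enable_flag: bool = False) -> str:
    """Render Spark config lines for a cross-account Glue Data Catalog.

    Hypothetical helper: it only formats text to paste into the cluster's
    Spark configuration; it does not call any Databricks API.
    """
    # AWS account IDs are 12-digit numbers.
    if not re.fullmatch(r"\d{12}", catalog_account_id):
        raise ValueError("catalog_account_id must be a 12-digit AWS account ID")
    # Loose shape check for region names such as us-east-1 (an assumption,
    # not an authoritative list of regions).
    if not re.fullmatch(r"[a-z]{2}(-[a-z]+)+-\d", catalog_region):
        raise ValueError(f"unexpected region format: {catalog_region!r}")

    lines = []
    if include_enable_flag:
        # Taken from Databricks documentation, not from this topic.
        lines.append("spark.databricks.hive.metastore.glueCatalog.enabled true")
    lines.append(f"spark.hadoop.hive.metastore.glue.catalogid {catalog_account_id}")
    lines.append(f"spark.hadoop.aws.region {catalog_region}")
    return "\n".join(lines)


# Example values only; replace them with your Glue account ID and region.
print(glue_spark_conf("111122223333", "us-east-1"))
```

Validating the values before pasting them into the cluster configuration catches the most common mistake in this setup, which is entering the Databricks account ID instead of the Glue Data Catalog account ID.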

Create Cross-Account IAM Roles for Databricks to Access AWS Glue Data Catalog

To allow Databricks to access AWS Glue Data Catalog, you must create an IAM role to manage access securely. When the tools are in different AWS accounts, you need a cross-account IAM role. This involves creating a role in the AWS account containing Glue Data Catalog (let's call it Account A) and allowing the AWS account where Databricks is deployed (let's call it Account B) to assume that role. By defining specific permissions (listed below) and using AWS Security Token Service (STS), the role enables secure cross-account access to Glue Data Catalog.

To create a cross-account IAM role for Glue Data Catalog and Databricks:

-

Create an IAM role in AWS account containing Glue Data Catalog (Account A)

-

In the AWS account that contains Glue Data Catalog (Account A), create an IAM role that grants the required permissions, for example, permission to retrieve the metadata of a specific database, list all databases, or create tables within a specified database in AWS Glue Data Catalog.

-

Attach a permissions policy to the IAM role. This policy must define which actions are allowed or denied in AWS Glue Data Catalog. The policy must contain the following permissions:

-

glue:GetDatabase

-

glue:CreateDatabase

-

glue:GetDatabases

-

glue:CreateTable

-

glue:GetTables

-

glue:GetTable

Note:

If you use the CloudFormation Template (CFT) provided by Calibo to create an IAM role in the Glue Data Catalog account, the permissions policy is attached to the role automatically.

-

On the Trust relationships tab of this role, specify the Databricks IAM role ARN from Account B as the trusted entity that can assume this role under specified conditions. In the Condition element, provide the external ID that needs to be present in the role assumption request. Here is an example policy for your reference.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::<AWS_Account_B_ID>:role/<Role_Name>"
      },
      "Action": "sts:AssumeRole",
      "Condition": {
        "StringEquals": {
          "sts:ExternalId": "<External_ID>"
        }
      }
    }
  ]
}

This AWS IAM policy allows the Databricks IAM role in another AWS account (Account B) to assume a role in the AWS account containing Glue Data Catalog (Account A) where this policy is applied. Replace the following placeholders in this policy with your actual values.

-

<AWS_Account_B_ID>: Replace this with the ID of the AWS account (Account B) where the Databricks IAM role exists.

-

<Role_Name>: Replace this with the name of the Databricks IAM role in Account B that will assume this role.

-

<External_ID>: Replace this with the external ID that you specified in the Glue Data Catalog connection details.
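
The two policy documents for the Account A role can also be generated rather than edited by hand, which helps keep the placeholders consistent. The following is a minimal sketch under stated assumptions: the function names are hypothetical, the account ID, role name, and external ID are example values, and the `"Resource": "*"` default is an assumption because this topic does not specify a resource scope for the permissions policy.

```python
import json
import re

# Actions listed in this topic for the Account A permissions policy.
GLUE_ACTIONS = [
    "glue:GetDatabase",
    "glue:CreateDatabase",
    "glue:GetDatabases",
    "glue:CreateTable",
    "glue:GetTables",
    "glue:GetTable",
]


def permissions_policy(resource: str = "*") -> dict:
    """Build the permissions policy for the Account A role.

    The default resource is an assumption; narrow it to the catalog,
    database, and table ARNs you actually need.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": list(GLUE_ACTIONS), "Resource": resource}
        ],
    }


def trust_policy(account_b_id: str, role_name: str, external_id: str) -> dict:
    """Build the trust policy that lets the Account B role assume this role."""
    if not re.fullmatch(r"\d{12}", account_b_id):
        raise ValueError("account_b_id must be a 12-digit AWS account ID")
    if not external_id:
        raise ValueError("external_id must not be empty")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "AWS": f"arn:aws:iam::{account_b_id}:role/{role_name}"
                },
                "Action": "sts:AssumeRole",
                # The request must carry this external ID to be accepted.
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


# Example values only.
print(json.dumps(permissions_policy(), indent=2))
print(json.dumps(
    trust_policy("444455556666", "databricks-glue-access", "example-external-id"),
    indent=2,
))
```

The printed JSON can be pasted into the IAM console; the sketch deliberately does not call AWS itself.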

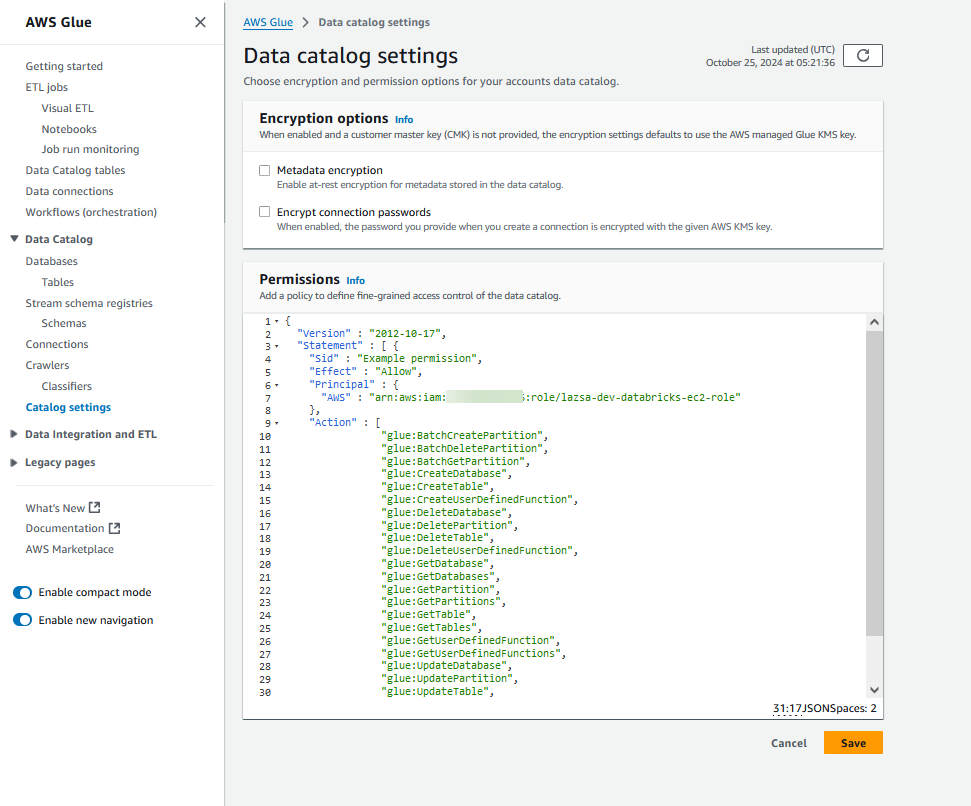

Attach a Resource Policy to Glue Data Catalog

-

Sign in to the AWS Management Console and open AWS Glue.

-

Go to Data Catalog > Catalog Settings.

-

In the Permissions editor, add the resource policy statement to define the permissions (what actions are allowed or denied) for AWS Glue Data Catalog. In this policy, specify the IAM role ARN created in the Databricks account (Account B) as a principal that is allowed to access the resource. Only one policy at a time can be attached to a Data Catalog.

-

The policy statement will look similar to the following. Replace the placeholders with actual values.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "Example permission",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::<aws-account-id-databricks>:role/<iam-role-for-glue-access>"
      },
      "Action": [
        "glue:BatchCreatePartition",
        "glue:BatchDeletePartition",
        "glue:BatchGetPartition",
        "glue:CreateDatabase",
        "glue:CreateTable",
        "glue:CreateUserDefinedFunction",
        "glue:DeleteDatabase",
        "glue:DeletePartition",
        "glue:DeleteTable",
        "glue:DeleteUserDefinedFunction",
        "glue:GetDatabase",
        "glue:GetDatabases",
        "glue:GetPartition",
        "glue:GetPartitions",
        "glue:GetTable",
        "glue:GetTables",
        "glue:GetUserDefinedFunction",
        "glue:GetUserDefinedFunctions",
        "glue:UpdateDatabase",
        "glue:UpdatePartition",
        "glue:UpdateTable",
        "glue:UpdateUserDefinedFunction"
      ],
      "Resource": "arn:aws:glue:<aws-region-target-glue-catalog>:<aws-account-id-target-glue-catalog>:*"
    }
  ]
}
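
Because only one resource policy can be attached to a Data Catalog at a time, adding the Databricks statement usually means merging it into whatever policy already exists rather than overwriting it. The following is a minimal sketch of that merge as a pure function. `merge_statement` is a hypothetical helper, all ARNs are example values, and fetching and saving the policy (for example, with the boto3 Glue client's `get_resource_policy` and `put_resource_policy` calls) is left out so that the block runs without AWS credentials.

```python
import json
from typing import Optional


def merge_statement(existing_policy_json: Optional[str], statement: dict) -> dict:
    """Return a policy document that contains `statement` once per Sid."""
    if existing_policy_json:
        policy = json.loads(existing_policy_json)
    else:
        # No policy attached yet: start from an empty document.
        policy = {"Version": "2012-10-17", "Statement": []}

    statements = policy.get("Statement", [])
    # A policy may hold a single statement object instead of a list.
    if isinstance(statements, dict):
        statements = [statements]

    # Drop an earlier statement with the same Sid so re-running is idempotent.
    sid = statement.get("Sid")
    if sid is not None:
        statements = [s for s in statements if s.get("Sid") != sid]

    policy["Statement"] = statements + [statement]
    return policy


# Illustrative statement already attached to the catalog.
existing = json.dumps({
    "Version": "2012-10-17",
    "Statement": [{
        "Sid": "ExistingAccess",
        "Effect": "Allow",
        "Principal": {"AWS": "arn:aws:iam::111122223333:root"},
        "Action": "glue:GetDatabases",
        "Resource": "arn:aws:glue:us-east-1:111122223333:catalog",
    }],
})

databricks_statement = {
    "Sid": "Example permission",
    "Effect": "Allow",
    "Principal": {"AWS": "arn:aws:iam::444455556666:role/databricks-glue-access"},
    # Abbreviated for the example; use the full action list from the policy above.
    "Action": ["glue:GetDatabase", "glue:GetTable"],
    "Resource": "arn:aws:glue:us-east-1:111122223333:*",
}

merged = merge_statement(existing, databricks_statement)
print(json.dumps(merged, indent=2))
```

Keying the merge on `Sid` is a design choice for this sketch; if your existing statements have no `Sid`, review the merged document by hand before attaching it.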

Configure Databricks to Assume the Role to Access Glue Data Catalog (Account B)

-

In AWS account B (where Databricks is deployed), configure Databricks to assume the role from AWS account A (using the IAM role ARN from AWS account A).

-

Attach the following policy to allow Databricks to assume the Glue Data Catalog role.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "sts:AssumeRole",
      "Resource": "arn:aws:iam::<AWS_Account_A_ID>:role/<IAM Role for Glue Access>"
    }
  ]
}

-

Databricks will use the AWS Security Token Service (STS) to assume the role in AWS account A, temporarily obtaining credentials that grant it access to Glue Data Catalog.
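
Databricks performs this role assumption itself, but reproducing the STS call by hand can help verify that the trust policy and external ID are set up correctly. The following is a minimal sketch, assuming a client that behaves like boto3's STS client (`boto3.client("sts")`) and that the code runs with the Account B role's credentials. The helper name `assume_glue_role`, the session name, and the `FakeStsClient` stand-in are hypothetical; the stand-in exists only so that the block runs without AWS access.

```python
from typing import Any, Dict


def assume_glue_role(sts_client: Any, role_arn: str, external_id: str,
                     session_name: str = "glue-catalog-check") -> Dict[str, Any]:
    """Assume the Account A role and return its temporary credentials."""
    response = sts_client.assume_role(
        RoleArn=role_arn,
        RoleSessionName=session_name,
        # Must match the sts:ExternalId condition in the trust policy.
        ExternalId=external_id,
    )
    # Holds AccessKeyId, SecretAccessKey, SessionToken, and Expiration.
    return response["Credentials"]


class FakeStsClient:
    """Stand-in for a real STS client; records the call and returns dummy data."""

    def assume_role(self, **kwargs: Any) -> Dict[str, Any]:
        self.last_call = kwargs
        return {
            "Credentials": {
                "AccessKeyId": "ASIAEXAMPLE",
                "SecretAccessKey": "example-secret",
                "SessionToken": "example-token",
                "Expiration": "2030-01-01T00:00:00Z",
            }
        }


# Swap FakeStsClient() for boto3.client("sts") to test against AWS.
fake = FakeStsClient()
creds = assume_glue_role(
    fake,
    "arn:aws:iam::111122223333:role/glue-access",  # example Account A role ARN
    "example-external-id",
)
print(sorted(creds))
```

If the real call fails with an access-denied error, the usual suspects are a mismatched external ID, a wrong principal ARN in the trust policy, or a missing `sts:AssumeRole` permission on the Account B role.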

You are now all set to use AWS Glue Data Catalog as a metastore in your data integration and data transformation jobs within your data pipeline.

What's next? Databricks Templatized Data Integration Jobs