Updating Cluster Libraries for Databricks

You may need to update the libraries used by a Databricks cluster in the following scenarios:

- A vulnerability is reported for a specific library version that the cluster currently uses.

- You are using a Databricks cluster to run a job that needs a specific library version. (Wherever possible, Calibo Accelerate recommends using a job cluster in this situation.)

To update libraries for a Databricks cluster

- Sign in to the Calibo Accelerate platform and click Configuration in the left navigation pane.

- On the Platform Setup screen, on the Cloud Platform, Tools & Technologies tile, click Configure.

- On the Cloud Platform, Tools & Technologies screen, in the Data Integration section, click Modify.

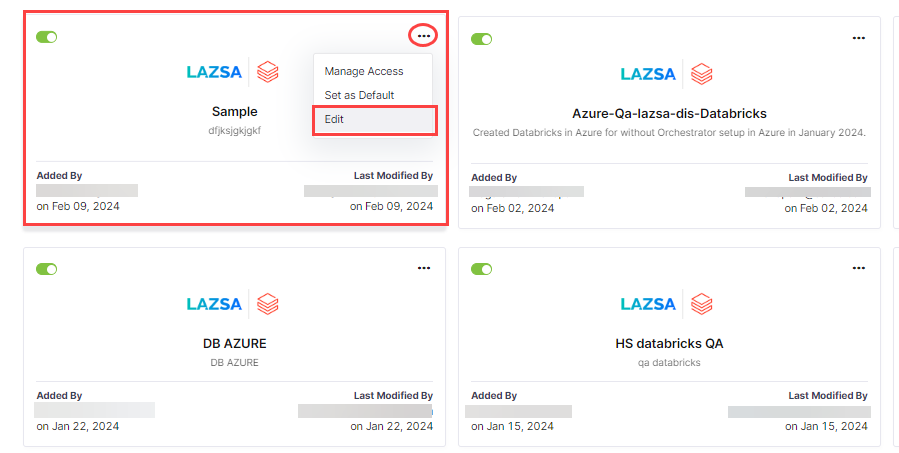

- Click the ellipsis (...) on the connection and click Edit.

-

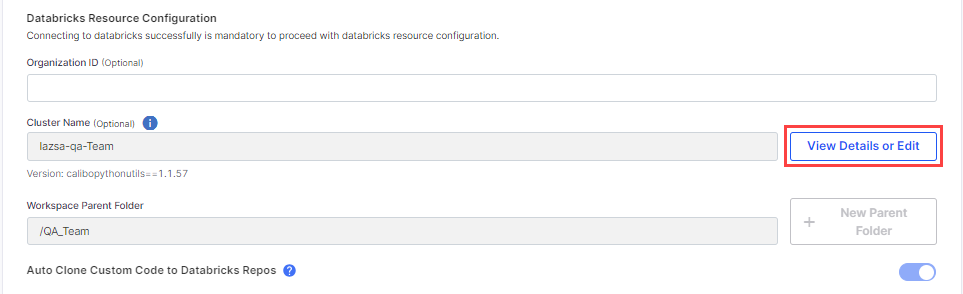

On the Databricks screen, click View Details or Edit.

-

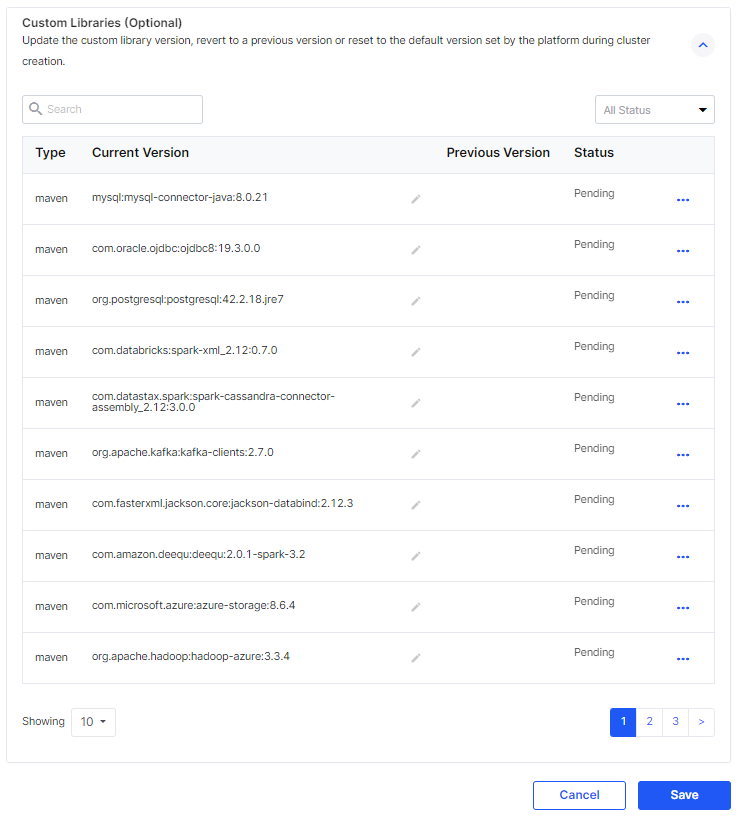

Click Custom Libraries and edit the version of the required libraries. Click Save.

Depending on the progress of the library installation, one of the following statuses is displayed in the Status column:

- Pending

- Installed

- Failed

If the library installation fails, or if you need to revert to a previous or default library version for any other reason, do one of the following:

- To revert to the previous version, click the ellipsis (...) and then click Reset to previous version.

- To revert to the default version, click the ellipsis (...) and then click Reset to default version.
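The Pending, Installed, and Failed values in the Status column are reported by Calibo Accelerate. If you also have direct access to the Databricks workspace, you can cross-check library state on the cluster itself through the Databricks Libraries API endpoint `GET /api/2.0/libraries/cluster-status`. The following Python sketch is not part of Calibo Accelerate; it assumes you have a personal access token with access to the cluster, and the workspace URL, token, and cluster ID are values you supply. Databricks reports more granular states (for example `PENDING`, `INSTALLING`, `INSTALLED`, and `FAILED`) than the three shown in the Status column, and how Calibo Accelerate maps them to its own values is not described here.

```python
import json
import urllib.parse
import urllib.request
from collections import Counter


def fetch_cluster_library_status(workspace_url: str, token: str, cluster_id: str) -> dict:
    """Call GET /api/2.0/libraries/cluster-status and return the parsed JSON body.

    workspace_url, token, and cluster_id are caller-supplied placeholders
    (for example "https://<your-workspace>.cloud.databricks.com").
    """
    query = urllib.parse.urlencode({"cluster_id": cluster_id})
    request = urllib.request.Request(
        f"{workspace_url}/api/2.0/libraries/cluster-status?{query}",
        headers={"Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def describe_library(library: dict) -> str:
    """Return a readable label such as 'pypi: requests==2.31.0'."""
    for kind, spec in library.items():
        if isinstance(spec, dict):
            # PyPI and CRAN specs use "package"; Maven specs use "coordinates".
            return f"{kind}: {spec.get('package') or spec.get('coordinates')}"
        # jar, whl, egg, and requirements libraries are plain path strings.
        return f"{kind}: {spec}"
    return "unknown"


def summarize(status_body: dict) -> Counter:
    """Print every library whose status is FAILED and return a count per status."""
    statuses = status_body.get("library_statuses", [])
    for entry in statuses:
        if entry.get("status") == "FAILED":
            print("FAILED", describe_library(entry.get("library", {})), entry.get("messages", []))
    return Counter(entry.get("status", "UNKNOWN") for entry in statuses)
```

For example, `summarize(fetch_cluster_library_status(workspace_url, token, cluster_id))` returns the number of libraries in each state and prints any failed library together with the messages Databricks returned for it. The sketch uses only the Python standard library so that it does not depend on a particular Databricks SDK version.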


What's next? Databricks Custom Transformation Job