Data Issue Resolver using Unity Catalog

In the Data Issue Resolver stage, you enhance the quality of data in various ways by handling duplicate data, handling missing data, outliers, specifying the partitioning order, handling case sensitivity, string operations and so on.

Prerequisites

You must complete the following prerequisites before creating a data issue resolver job:

-

The data quality nodes have specific requirements as far as the Databricks Runtime version of the cluster and access mode is concerned. Following are the requirements for Unity Catalog-enabled Databricks used as a data issue resolver node in the data pipeline:

Data Quality Node Databricks Cluster Runtime Version Access Mode Data Issue Resolver 14.3 LTS Dedicated/Standard

-

Access to a Databricks Unity Catalog node which will be used as a data lake in the data ingestion pipeline.

Creating a data issue resolver job

-

In the data quality stage, add a Data Issue Resolver node. Connect to and from the data lake.

-

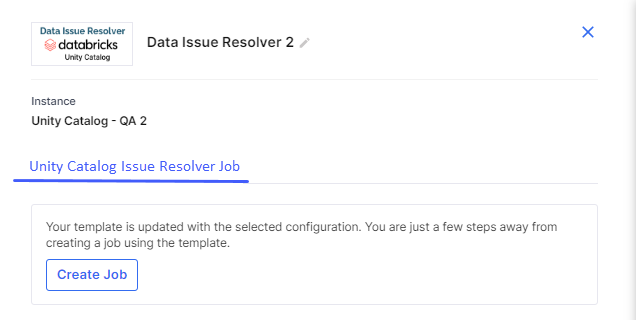

Click the issue resolver node and click Create Job to create an issue resolver job.

-

Complete the following steps to create the job:

Job Name

Job Name

Provide job details for the data issue resolver job:

-

Template - Based on the source and destination that you choose in the data pipeline, the template is automatically selected.

-

Job Name - Provide a name for the data issue resolver job.

-

Node rerun Attempts - Specify the number of times the pipeline rerun is attempted on this node of the pipeline, in case of failure. The default setting is done at the pipeline level. You can change the rerun attempts by selecting 1, 2, or 3.

Source

Source

-

Source - This is automatically selected depending on the data lake node configured and added in the pipeline.

-

Datastore - The configured datastore that you added in the data pipeline is displayed.

-

Catalog Name -The catalog which is associated with the configured datastore is displayed.

-

Schema Name - The schema associated with the catalog is displayed. The schema is selected based on the catalog, but you can select a different schema.

-

Source Table - Select a table from the selected datastore.

-

Data Processing Type - Select the type of processing that must be done for the data. Choose from the following options:

Delta

Delta

In this type of processing, incremental data is processed. For the first job run, the complete data is considered. For subsequent job runs, only delta data is considered.

-

Based On - The delta processing is done based on the following options:

Option Description Table Versions (Change Data Feed) -

Unity Catalog stores data in delta format, this feature is called Change Feed Data. Unity Catalog creates versions of tables when there is a change in data. Select this option if you are using the delta format.

-

Unique Identifier Columns - Select a column with unique records. This column is used to create versions, if multiple versions of the same record exist.

Table Columns -

Unity Catalog also supports tables like CSV, JSON, Parquet where data is stored in tables. Select this option if you are not using the delta format.

-

Delta Identification Column - In this type of processing, the complete dataset is considered for processing in each job run.

-

Table Versions - If you select this option, provide Unique Identifier Columns by selecting one or more columns the Delta table that uniquely identify each record and can be used as a reference to retrieve the latest version of the data.

-

Table Columns - If you select this option, provide Unique Identifier Columns by selecting a column with unique records. This column is used to create versions, if multiple versions of the same record exist.

-

Full

Full

In this type of processing, the complete dataset is considered for processing in each job run.

Data Issue Resolver Constraints

-

-

Column - Select a column on which you want to run the constraint.

-

Constraint - Select the constraint that you want to run on the data in the analyzer job from the following options:

Data Issue Resolver Constraints

Data Issue Resolver Constraints

Constraint Description Handle Duplicate Data Select a column with unique key for handling duplicate data. Partitioning Order Select ascending or descending order for partitioning. Handle Missing Data Select from the following options:

-

Remove Null/Empty - remove null or empty

-

Replace Null/Empty - Replace null or empty values with a provided value.

Handle Outliers Specify an integer value to handle outliers. Handle String Operation Perform a string operation from the following options:

-

Trim

-

LTrim

-

RTrim

-

LPad

-

RPad

-

Regex Replace

-

Sub-string

Handle Case Sensitivity Specify how to handle case sensitivity:

-

Upper Case

-

Lower Case

-

Proper Case

Replace Selective Data Replace data with the provided value:

-

Click Add.

-

Provide Existing Value and Replacement Value.

-

Click Add.

Handle Data Against Master Table For this constraint you can either enter a value manually or select values from master table.

-

Click Add.

-

Select one of the following options:

-

Enter value manually - Provide a value.

-

Select values from master table - Do the following:

-

Datastore - Select a Datastore.

-

Schema Name - select a schema.

-

Table - Select a table.

-

SQL Warehouse - Select a SQL warehouse.

-

Click Load Column - This populates the columns from the selected master table.

-

Select Column - select a column from the dropdown list. Sample column values are displayed.

-

-

-

-

Click Add.

-

After you have added the required constraints click Add to create a summary of the selected data processing options.

-

You can perform the following actions:

-

Click the constraints column to open the side drawer and review the added constraints,

-

Click the ellipsis (...) and edit or delete the constraints.

-

Click Next.

Target

Target

-

Target - The data lake that was configured for the target is auto-populated.

-

Datastore - The datastore that you configured for the Databricks Unity Catalog is auto-populated.

-

Catalog Name - The catalog name that is associated with the Unity Catalog instance is auto-populated.

-

Schema - The schema that is associated with the catalog is auto-populated. If required you can change the schema at this stage.

-

Map source data to target tables - Map the source file with a table in the target. You can either map an existing table or create a new table and map it.

Do one of the following:

-

Source - Select a table from the dropdown.

-

Target - If the schema of the target table matches the schema oof the source table, select a table from the dropdown. Else create a new table. Type a name for a new table and click Click to create "table name".

-

Click Map Table.

To delete a mapping click the ellipsis (...) and then click Delete.

-

Store Rejected Records - Enable this option to store the records that fail to meet the selected constraints.

-

Schema - Select the schema for the table in which the rejected records are stored.

-

Table - Provide a table name to store the rejected records.

-

Click Next.

Schema Mapping

Schema Mapping

In this step you define the schema of the target table by using various options provided in this stage:

-

Mapped Data - This provides the mapping of the source file with the target table that was done in the previous stage.

-

Filter columns from selected tables- You deselect columns as per your use case and run a constraint on each column.

-

Deselect columns that are not required, from the list of columns that is populated, and provide custom names to certain columns.

Note:

You must select at least one column.

-

Select a constraint from the options: Set Not Null and Check. For the check constraint you must provide a SQL condition that needs to be checked for the column.

-

-

Continue job run even if constraints are not met - This toggle when enabled, ensures that the job run is continued even if a constraint is not met.

-

Add Custom Columns - Enable this option to add additional columns apart from the existing columns of the table. To add custom columns, do the following:

Click Add Custom Column after providing the details for each column. Repeat the steps for the number of columns that you want to add.

-

Column Name - Provide a column name for the custom column that you want to add.

-

Type and Value - Select the parameter type for the new column. Choose from the following options:

-

Static Parameter - Provide a static value that is added for this column.

-

System Parameter - Select a system-generated parameter from the dropdown list that must be added to the custom column.

-

Generated - Provide the SQL code to combine two or more columns to generate the value of the new column.

-

-

Click Add Custom Column after adding the details for each column.

-

Repeat steps 1-3 for the number of columns that you want to add. After adding the required custom columns, click Add Column Mapping Details.

-

To review the column mapping details, click the ellipsis (...) and click View Details.

-

Click Next.

Data Management

Data Management

In this step you select the operation type that you want to perform on the source table and the partitioning that you want to create on the target table.

-

Mapped Data - Select the mapped source and target tables.

-

Operation Type - Select the operation type to perform on the source data. Choose one of the following options:

-

Append - Adds new data at the end of a file without erasing the existing content.

-

Merge - For the first job run, the data is added to the target table. For subsequent job runs, this operation type merges the data with the existing data when there is a change in the source data.

Unique Column Name - The merge operation is done based on the unique key column that you provide. Select a column name from the dropdown list.

-

Overwrite - Replaces the entire content of a file with new data.

-

-

Enable Partitioning - Enable this option if you want to use partitioning for the target data. Select from the following options:

-

Data Partition - Select column name from the dropdown list. Select a column from the dropdown list. Click Add.

-

Date Based Partition - Select the type from the options - yearly, monthly , or daily. Provide the prefix that you would like to add to the partition. Adding a prefix is optional.

Click Add. The Data mapping for mapped tables displays the mapping details. Click the ellipsis (...) to edit or delete the mapping.

-

Cluster Configuration

Cluster Configuration

You can select an all-purpose cluster or a job cluster to run the configured job. In case your Databricks cluster is not created through the Calibo Accelerate platform and you want to update custom environment variables, refer to the following:

Updating Custom Variables for a Databricks Cluster

All Purpose Clusters

All Purpose Clusters

Cluster - Select the all-purpose cluster that you want to use for the data integration job, from the dropdown list.

Job Cluster

Job Cluster

Cluster Details Description Choose Cluster Provide a name for the job cluster that you want to create. Job Configuration Name Provide a name for the job cluster configuration. Databricks Runtime Version Select the appropriate Databricks version. Worker Type Select the worker type for the job cluster. Workers Enter the number of workers to be used for running the job in the job cluster.

You can either have a fixed number of workers or you can choose autoscaling.

Enable Autoscaling Autoscaling helps in scaling up or down the number of workers within the range specified by you. This helps in reallocating workers to a job during its compute-intensive phase. Once the compute requirement reduces the excess number of workers are removed. This helps control your resource costs. Cloud Infrastructure Details First on Demand Provide the number of cluster nodes that are marked as first_on_demand.

The first_on_demand nodes of the cluster are placed on on-demand instances.

Availability Choose the type of EC2 instances to launch your Apache Spark clusters, from the following options:

-

Spot

-

On-demand

-

Spot with fallback

Zone Identifier of the availability zone or data center in which the cluster resides.

The provided availability zone must be in the same region as the Databricks deployment.

Instance Profile ARN Provide an instance profile ARN that can access the target Amazon S3 bucket. EBS Volume Type The type of EBS volume that is launched with this cluster. EBS Volume Count The number of volumes launched for each instance of the cluster. EBS Volume Size The size of the EBS volume to be used for the cluster. Additional Details Spark Config To fine tune Spark jobs, provide custom Spark configuration properties in key value pairs. Environment Variables Configure custom environment variables that you can use in init scripts. Logging Path (DBFS Only) Provide the logging path to deliver the logs for the Spark jobs. Init Scripts Provide the init or initialization scripts that run during the start up of each cluster. -

-

Select All

-

Node Execution Failed

-

Node Execution Succeeded

-

Node Execution Running

-

Node Execution Rejected

-

With this the job creation is done. You can run the job in multiple ways:

-

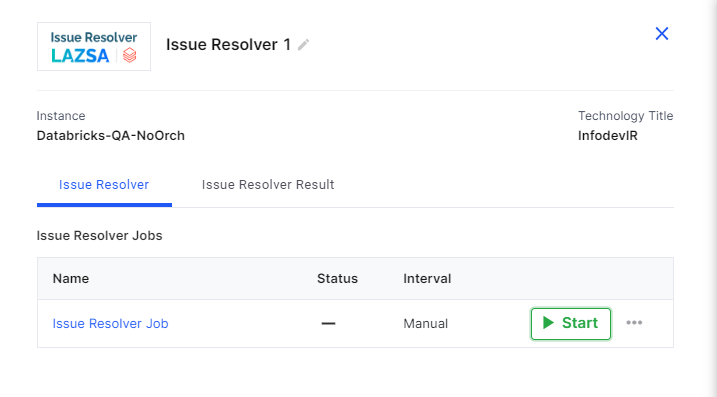

Click the Data Issue Resolver node and click Start to initiate the job run.

-

Publish the pipeline and then click Run Pipeline.

-

-

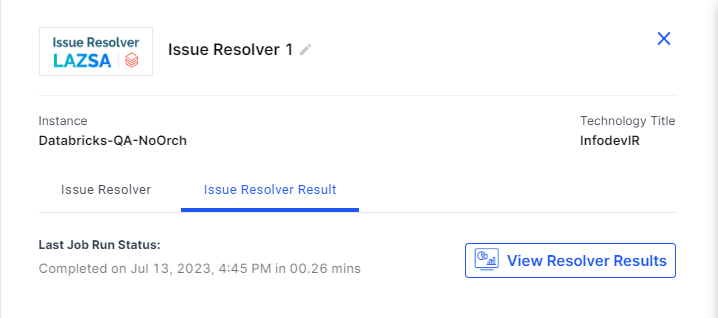

On completion of the job, click the Issue Resolver Result tab and then click View Resolver Results.

-

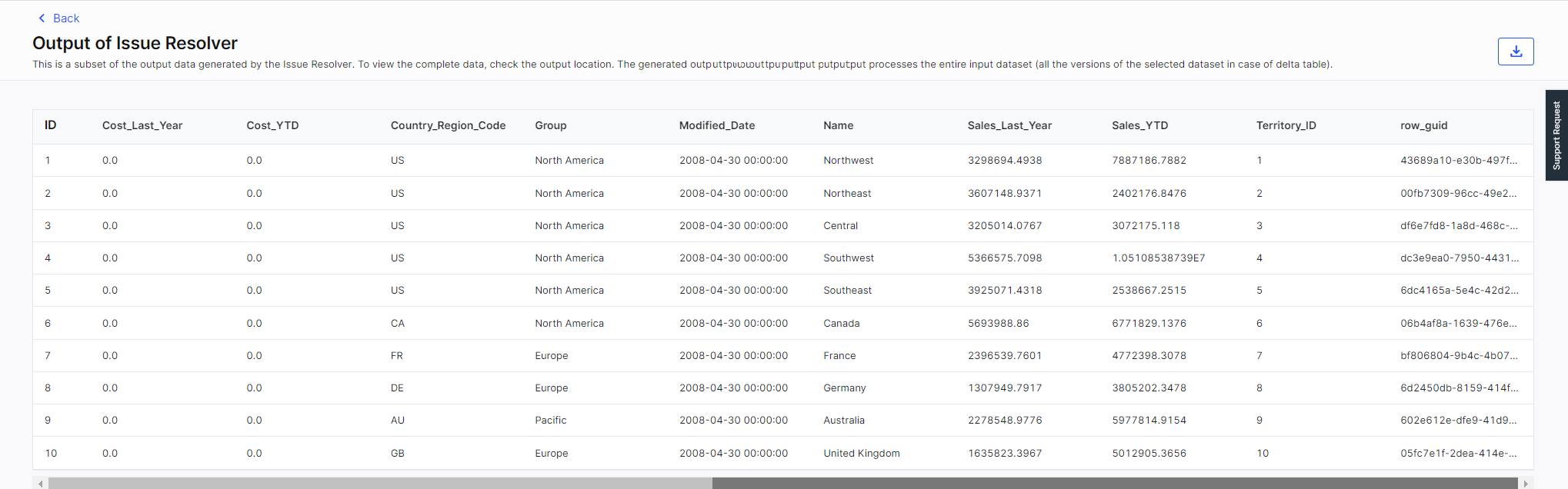

View the output of the Issue Resolver job. Click

to download and save the results of Issue Resolver to a CSV file.

to download and save the results of Issue Resolver to a CSV file.

| SQS and SNS | |

|---|---|

| Configurations - Select an SQS or SNS configuration that is integrated with the Calibo Accelerate platform. | |

|

Events |

|

| Event Details - Select the details of the events for which notifications are enabled. | |

| Additional Parameters - provide any additional parameters to be considered for SQS and SNS queues. |

| What's next? Snowflake Custom Transformation Job |