Data Crawler and Data Catalog

The Calibo Accelerate Data Pipeline Studio (DPS) lets you crawl data from various types of sources and create a data catalog from it. You can then use the catalog in the data source stage of a data pipeline. A data crawler fetches metadata along with the data. Creating a data catalog from the data crawler provides wider visibility and deeper access to the data. After you create a data crawler and fetch the data, you can filter the data before creating a data catalog.

Data Crawler supports the following types of data sources:

- Amazon S3
- CSV
- FTP
- SFTP
- REST API
- MS Excel
- Microsoft SQL Server
- MySQL
- Oracle database
- PostgreSQL
- Snowflake

How do I create a data crawler?

In the Calibo Accelerate platform, you can create a data crawler in Data Pipeline Studio at the product level, or you can create one at the platform level that can be used across the platform.

To create a data crawler at the platform level

- Sign in to the Calibo Accelerate platform and click Configuration in the left navigation pane.
- On the Platform Setup screen, click Data Pipeline Studio.
- Click + New Crawler.
- Provide a name for the crawler and click Next.
- Select a data source from the dropdown and click Save and Crawl. On the crawler screen, you can view the data that was fetched by the crawler.

Can I filter the data from the data crawler before I create a data catalog?

After you crawl the data from your data source, you can apply conditions to the columns of a table to filter the data according to your use case. For example, if you have the sales data for a product and you want to filter customers based on age, you can use a condition to do that.

-

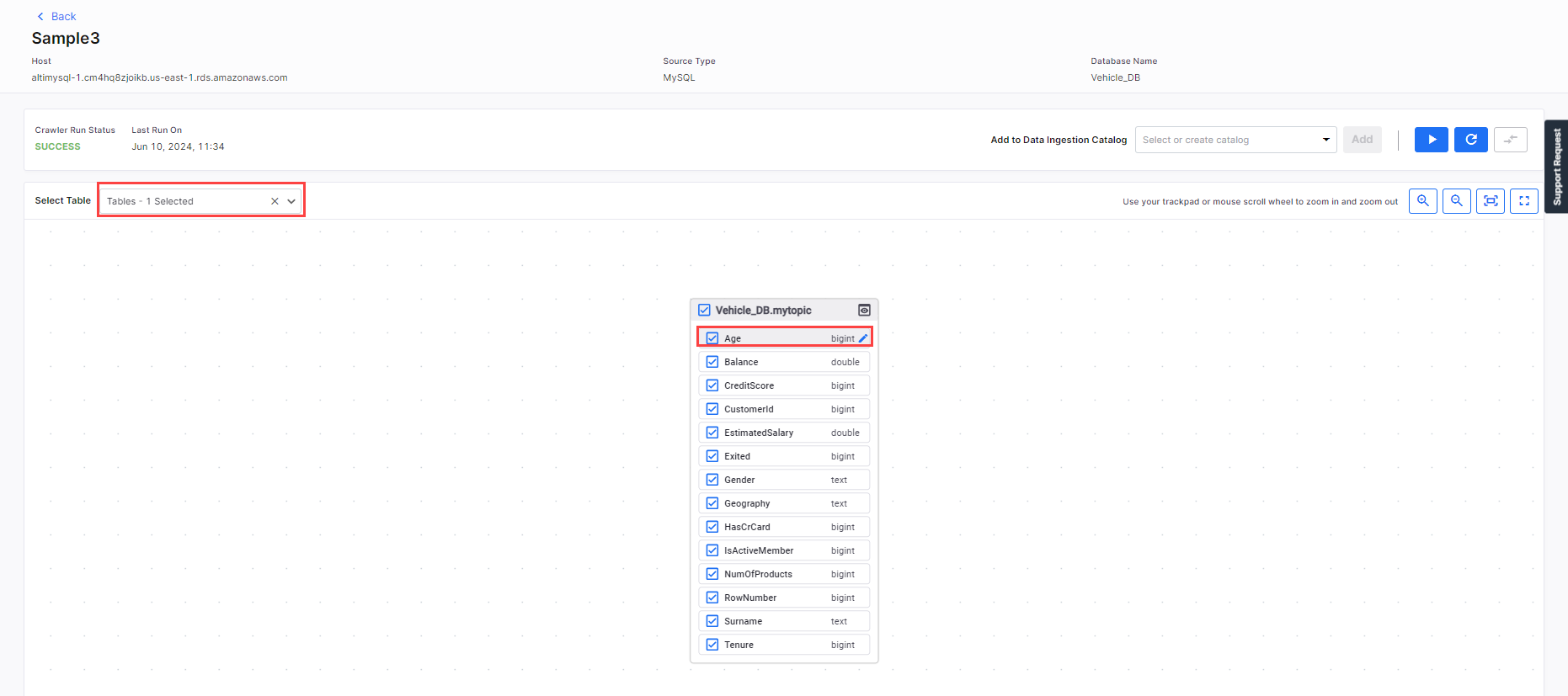

On the Data Crawlers tab of Data Pipeline Studio, click the data crawler for which you want to filter data.

-

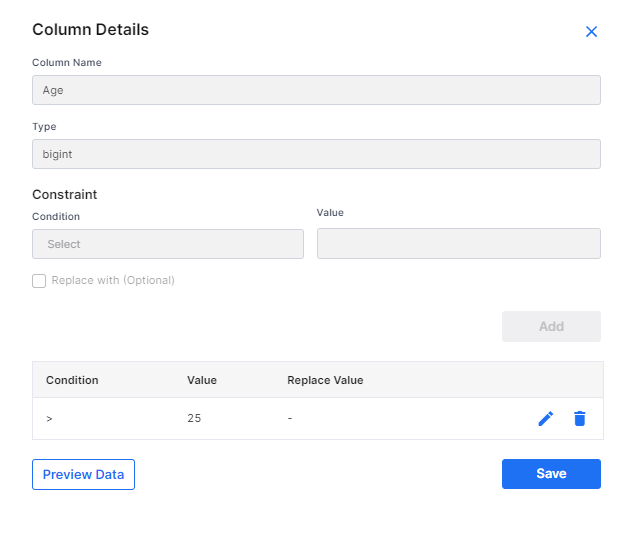

On the data crawler screen, select a table. On the list of columns, notice the pencil icon adjacent to the column name. Click the icon.

-

On the Column Details side drawer, under Constraint, select the following:

-

Condition - Select > (greater than).

-

Value - Enter 25.

-

-

Click Save. The condition and value are added. Close the side drawer.

-

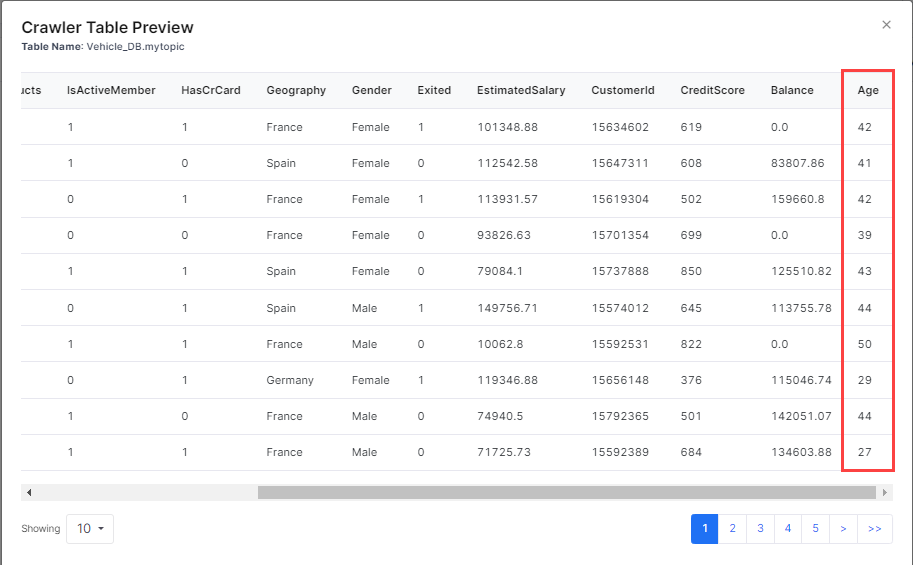

Click the preview icon

of the table to view the filtered data. The preview shows data as per the applied condition.

of the table to view the filtered data. The preview shows data as per the applied condition.

Note: Currently, the preview option is available only for RDBMS data crawlers: Microsoft SQL Server, MySQL, PostgreSQL, Oracle, and Snowflake.
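
To make the effect of a column constraint concrete, the following is a minimal Python sketch of what a condition such as > 25 on an age column does to a set of rows. It is only an illustration and does not represent how Data Pipeline Studio applies constraints internally. The sample rows, the `apply_constraint` helper, and every condition other than > are assumptions made for this example.

```python
import operator

# Hypothetical sample rows standing in for a crawled sales table.
rows = [
    {"customer": "Asha", "age": 31, "amount": 120.0},
    {"customer": "Ben", "age": 22, "amount": 75.5},
    {"customer": "Chen", "age": 25, "amount": 40.0},
    {"customer": "Dana", "age": 47, "amount": 310.0},
]

# Map condition symbols to comparison functions.
# Only ">" appears in the steps above; the others are illustrative assumptions.
CONDITIONS = {
    ">": operator.gt,
    ">=": operator.ge,
    "<": operator.lt,
    "<=": operator.le,
    "=": operator.eq,
}


def apply_constraint(rows, column, condition, value):
    """Return only the rows whose `column` satisfies `condition` against `value`."""
    compare = CONDITIONS[condition]
    return [row for row in rows if compare(row[column], value)]


# Equivalent of Condition: > (greater than), Value: 25 on the age column.
filtered = apply_constraint(rows, "age", ">", 25)
print([row["customer"] for row in filtered])  # prints ['Asha', 'Dana']
```

Note that a customer whose age is exactly 25 is excluded, because the condition is strictly greater than.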

How do I create a data catalog from a data crawler?

- On the data crawler screen, in the Add to Data Ingestion Catalog field, provide a name for the catalog and click Add. The catalog that you created is listed on the Data Ingestion Catalogs tab. You can also view which data crawler is associated with the data catalog.
- If the data crawler associated with the data catalog is updated, you can either update the data catalog or create another version of it.

| What's next? Data Sources |