Custom Transformation with Azure Data Lake

Calibo Accelerate supports Databricks custom transformation jobs that use Azure Data Lake as both the source and the target.

To create a Databricks custom transformation job

- Sign in to Calibo Accelerate and navigate to Products.

- Select a product and feature. Click the Develop stage of the feature.

- Click Data Pipeline Studio.

-

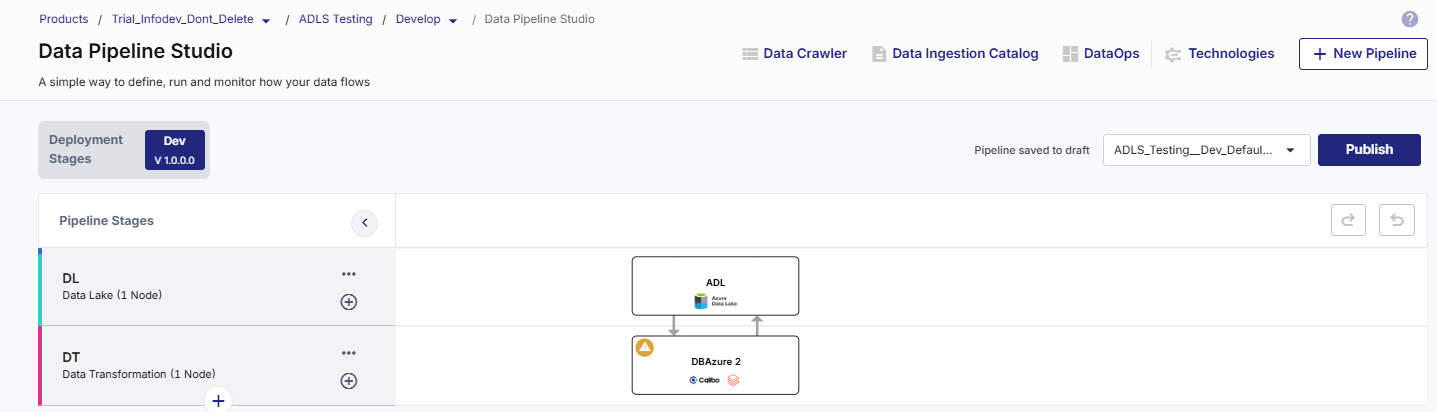

Create a pipeline with the following stages and add the required technologies:

- Configure the source and target nodes.

-

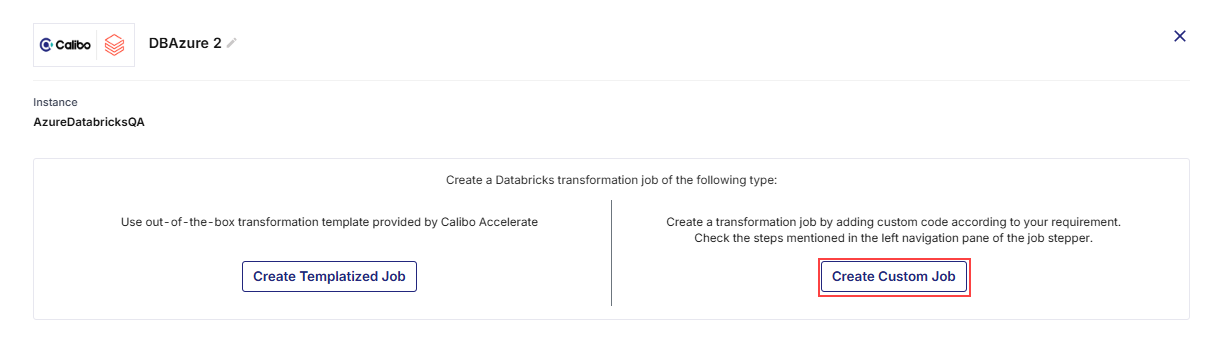

Click the data transformation node and click Create Custom Job.

-

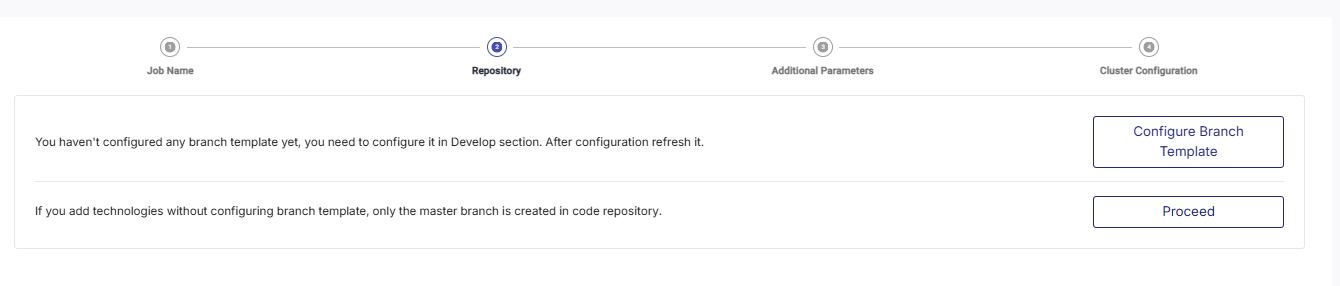

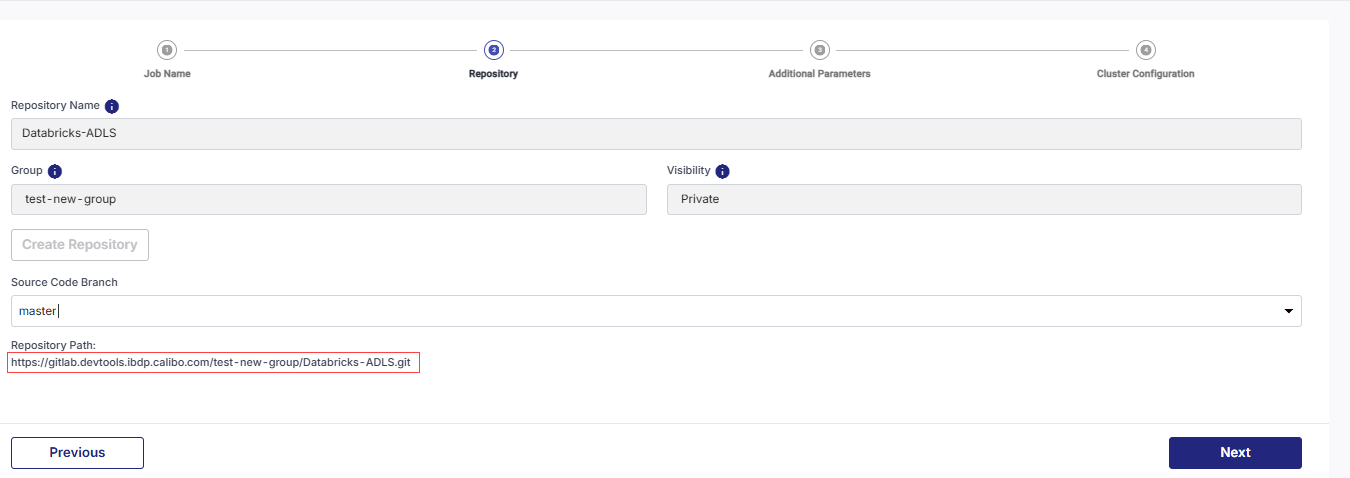

Complete the following steps to create the Databricks custom transformation job:

- After you create the job, click the job name to navigate to the Databricks notebook.

To add custom code to your transformation job

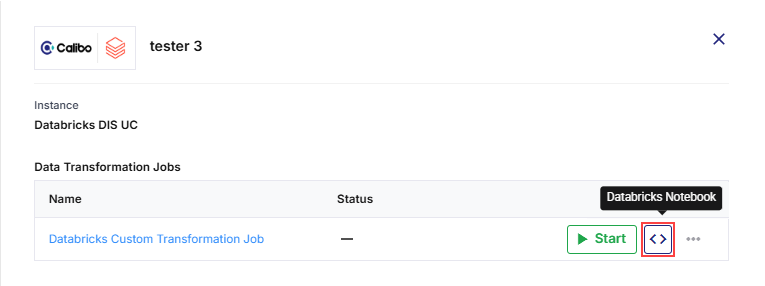

After you have created the custom transformation job, click the Databricks Notebook icon.

This opens the custom transformation job in the Databricks UI. Review the sample code, and then replace it with your custom code.
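As a rough illustration of what custom code might look like, the following PySpark sketch reads Parquet data from an Azure Data Lake Storage Gen2 path, applies a transformation, and writes the result to another path. The helper names (`abfss_path`, `transform`, `run`), the container and storage account names, the Parquet format, and the `amount` and `cust_id` columns are all hypothetical. The sketch also assumes that the cluster can already authenticate to the storage account and that `spark` is the session Databricks predefines in notebooks, so keep whatever connection setup the generated sample code provides.

```python
from typing import TYPE_CHECKING

if TYPE_CHECKING:
    # Imported for type hints only; in a Databricks notebook, `spark` already exists.
    from pyspark.sql import DataFrame, SparkSession


def abfss_path(container: str, account: str, path: str = "") -> str:
    """Build an ADLS Gen2 URI: abfss://<container>@<account>.dfs.core.windows.net/<path>."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


def transform(df: "DataFrame") -> "DataFrame":
    """Hypothetical business logic: drop rows without an amount, rename a column, deduplicate."""
    return (
        df.filter("amount IS NOT NULL")  # filter() accepts a SQL expression string
        .withColumnRenamed("cust_id", "customer_id")
        .dropDuplicates()
    )


def run(spark: "SparkSession", source: str, target: str) -> None:
    """Read Parquet from the source path, transform it, and overwrite the target path."""
    df = spark.read.format("parquet").load(source)
    transform(df).write.mode("overwrite").format("parquet").save(target)
```

In a notebook cell you might call it as `run(spark, abfss_path("source-container", "mystorageaccount", "input/"), abfss_path("target-container", "mystorageaccount", "output/"))`. Keeping `transform` separate from the read and write steps lets you check the business logic on a small DataFrame before pointing the job at real data.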

To run a custom transformation job

You can run the custom transformation job in one of the following ways:

-

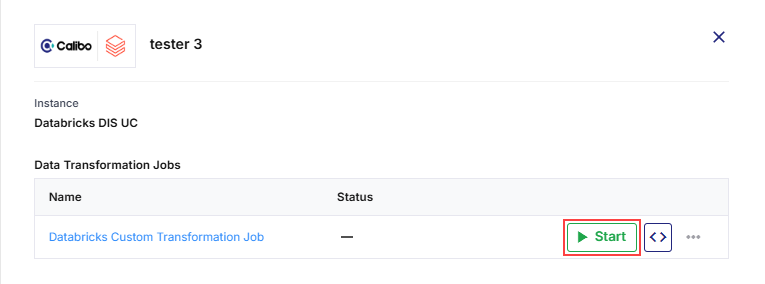

Navigate to Calibo Accelerate and click the Databricks node. Click Start to initiate the job run.

- Publish the pipeline, and then click Run Pipeline.

What's next? Databricks Custom Transformation Job